Verifiable Privacy and Transparency: A new frontier for Brave AI privacy

Today, Brave Leo offers a new capability for cryptographically verifiable privacy and transparency by deploying LLMs in NEAR AI Nvidia-backed Trusted Execution Environments (TEEs; see the “More about Trusted Execution Environments” section below for details). Brave believes that users must be able to verify that they are having private conversations with the model they expect. This capability is available in Brave Nightly (our testing and development channel) for early experimentation with DeepSeek V3.1, and we plan to extend it to more models based on feedback.

By integrating Trusted Execution Environments, Brave Leo moves towards offering unmatched verifiable privacy and transparency in AI assistants, in effect transitioning from the “trust me bro” process to the privacy-by-design approach that Brave aspires to: “trust but verify”.

Brave believes in user-first AI

Leo is the Brave browser’s integrated, privacy-preserving AI assistant. It is powered by state-of-the-art LLMs while protecting user privacy through a privacy-preserving subscription model, no chats in the cloud, no context in the cloud, no IP address logging, and no training on users’ conversations.

Brave believes that users must have:

- Verifiable Privacy: Users must be able to verify that Leo’s privacy guarantees match its public privacy promises.

- Verifiable Transparency in Model Selection: Users must be able to verify that Leo’s responses are, in fact, coming from the machine learning model the user expects (or pays for, in the case of Leo Premium).

The absence of these user-first features at competing chatbot providers introduces a risk of privacy-washing. It has also been shown, both in research (e.g., “Are You Getting What You Pay For? Auditing Model Substitution in LLM APIs”) and in practice (e.g., backlash against ChatGPT), that chatbot providers may have incentives to silently replace an expensive LLM with a weaker one that is cheaper to run, returning the weaker model’s results to the user in order to reduce GPU costs and increase profit margins.

Brave moves towards Verifiable Privacy and Transparency in LLMs through Confidential Computing

Brave begins this journey by removing the need to trust LLM/API providers, using Confidential LLM Computing on NEAR AI TEEs. Brave uses NEAR AI TEE-enabled Nvidia GPUs to ensure confidentiality and integrity by creating secure enclaves where data and code are processed with encryption.

These TEEs produce a cryptographic attestation report that includes measurements (hashes) of the loaded model and execution code. Such attestation reports can be cryptographically verified to gain strong assurance that:

- A secure environment is created through a genuine Nvidia GPU TEE, which generates cryptographic proofs of its integrity and configuration.

- Inference runs in this secure environment with full encryption that keeps user data private: no one can see any data passed by the user to the computation, or any results of the computation.

- The model and open-source code that users expect are running unmodified, with every computation cryptographically signed.
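The measurement check described above can be illustrated with a minimal sketch. It assumes a heavily simplified, hypothetical report format (`AttestationReport` with two hex digests) and toy byte strings standing in for the model weights and server code. Real NEAR AI or Nvidia attestation reports carry many more fields and are signed by the hardware; none of the names below come from their APIs.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    """Hypothetical, simplified report; real reports contain far more."""
    model_measurement: str  # hex SHA-256 of the loaded model weights
    code_measurement: str   # hex SHA-256 of the inference server code


def measure(blob: bytes) -> str:
    """Return the hex SHA-256 digest used here as a 'measurement'."""
    return hashlib.sha256(blob).hexdigest()


def measurements_match(report: AttestationReport,
                       expected_model: str,
                       expected_code: str) -> bool:
    """Accept only if both measurements equal the published expected values."""
    model_ok = hmac.compare_digest(report.model_measurement, expected_model)
    code_ok = hmac.compare_digest(report.code_measurement, expected_code)
    return model_ok and code_ok


# Toy stand-ins for the published artifacts (assumptions for illustration).
expected_model = measure(b"model-weights-v1")
expected_code = measure(b"server-code-v1")

honest = AttestationReport(measure(b"model-weights-v1"), measure(b"server-code-v1"))
swapped = AttestationReport(measure(b"cheaper-model"), measure(b"server-code-v1"))

print(measurements_match(honest, expected_model, expected_code))
print(measurements_match(swapped, expected_model, expected_code))
```

In this sketch a silently substituted model changes the model measurement, so the second check fails even though the server code is unchanged.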

In Stage 1 of our development, Brave performs the cryptographic verification, and users can use “Verifiably Private with NEAR AI TEE” in Leo as follows:

-

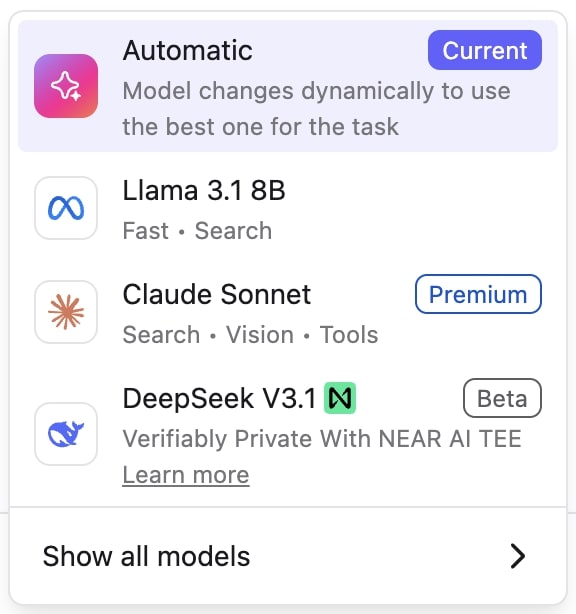

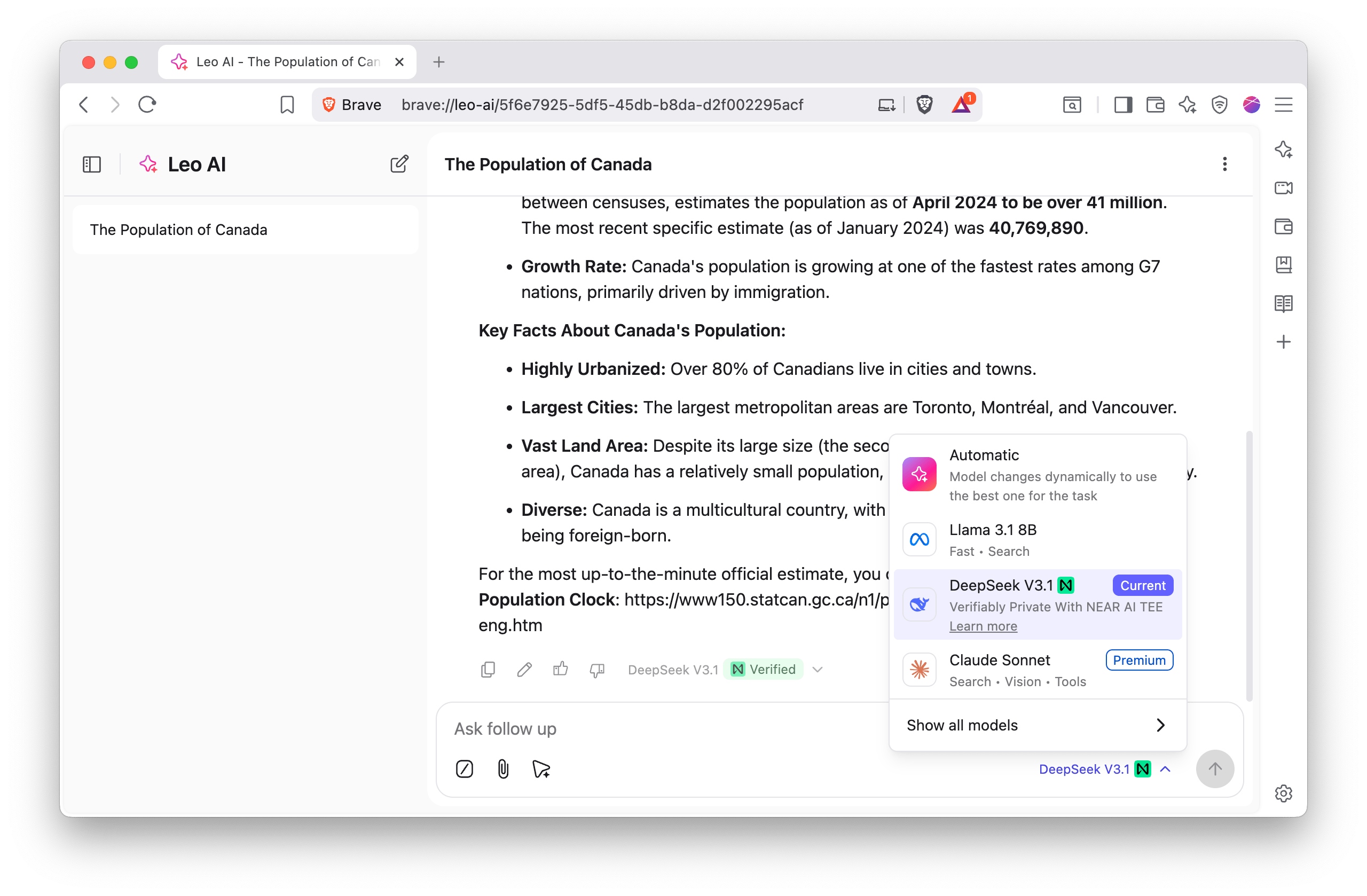

User selects DeepSeek V3.1 with label of Verifiably Private with NEAR AI TEE available in Leo on Brave Nightly

-

Brave performs NEAR AI TEE Verification, validating a cryptographic chain from NEAR open-source code to hardware-attestation execution, ensuring responses are generated in a genuine Nvidia TEE running a specific version of the NEAR open-source server

-

Brave transmits the outcome of verification to users by showing a verified green label (depicted in the screenshot below)

-

User starts chatting without having to trust the API provider to see or log their data and responses

The future of Verifiable Privacy and Transparency in Brave Leo

Today, we are excited to release TEE-based Confidential DeepSeek V3.1 Computing in Brave Nightly (our testing and development channel) for early experimentation and feedback.

Before rolling this out more broadly, we’re focused on two things:

- No user-facing performance overhead: TEEs introduce some computational overhead on the GPU. However, recent advances reduce this overhead significantly, down to nearly zero in some cases, as shown in “Confidential Computing on NVIDIA Hopper GPUs: A Performance Benchmark Study”. We want to ensure our users don’t experience performance regressions.

- End-to-end verification: We’re actively researching how to extend confidential computing in Leo so that users can verify the entire pipeline, with Brave open-sourcing all stages. In particular, we plan to move verification closer to users so they can reconfirm the API verification on their own in the Brave browser.

More about Trusted Execution Environments

A Trusted Execution Environment (TEE) is a secure area of a processor that provides an isolated computing environment, separate from traditional rich runtime environments such as an Operating System (OS). A TEE enforces strong hardware-backed guarantees of confidentiality and integrity for the code and data it hosts. These guarantees are achieved through mechanisms such as dedicated memory that is accessible only to the TEE.

Hardware isolation ensures that even a fully compromised OS cannot access or tamper with any code or data residing inside the TEE. In addition, TEEs expose hardware primitives such as secure boot and remote attestation, which ensure that only trusted code is loaded into the TEE and let external parties verify the integrity of the TEE.
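To make remote attestation more concrete, here is a minimal challenge-response sketch. It is an illustration under loud assumptions: a shared-secret HMAC stands in for the hardware's attestation signature (real TEEs sign with an asymmetric key that chains to the manufacturer's root certificate), and `tee_quote` and `verify_quote` are hypothetical names, not part of any vendor API. The point it shows is why a verifier supplies a fresh nonce: a quote is bound to both the challenge and the code measurement, so an old quote cannot be replayed.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: a shared secret stands in for the hardware's private
# attestation key. Real verifiers only hold the public certificate chain.
HARDWARE_KEY = secrets.token_bytes(32)


def tee_quote(nonce: bytes, measurement: bytes, key: bytes = HARDWARE_KEY) -> bytes:
    """Simulate the TEE signing the verifier's nonce plus its code measurement."""
    return hmac.new(key, nonce + measurement, hashlib.sha256).digest()


def verify_quote(quote: bytes, nonce: bytes, expected_measurement: bytes,
                 key: bytes = HARDWARE_KEY) -> bool:
    """Recompute the expected quote and compare in constant time."""
    expected = hmac.new(key, nonce + expected_measurement, hashlib.sha256).digest()
    return hmac.compare_digest(quote, expected)


# Fixed-length nonce (16 bytes) and measurement (32 bytes) keep the
# concatenation unambiguous in this toy encoding.
measurement = hashlib.sha256(b"trusted-code").digest()
nonce = secrets.token_bytes(16)
quote = tee_quote(nonce, measurement)

fresh_ok = verify_quote(quote, nonce, measurement)
replayed_ok = verify_quote(quote, secrets.token_bytes(16), measurement)
tampered_ok = verify_quote(quote, nonce, hashlib.sha256(b"other-code").digest())

print(fresh_ok, replayed_ok, tampered_ok)
```

Only the first check is expected to pass: the second reuses the quote against a different challenge, and the third expects a different code measurement.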

Chip manufacturers have enabled TEEs on CPUs (traditionally) and GPUs (recently). The combination of TEE-enabled CPUs (e.g., Intel TDX) and TEE-enabled GPUs (e.g., Nvidia Hopper) enables end-to-end confidential and integrity-preserving LLM inference with minimal performance penalty.