Introducing AI Grounding with Brave Search API, providing enhanced search performance in AI applications

Today, we’re launching AI Grounding with the Brave Search API, a robust, all-in-one solution for connecting an AI system’s outputs to verifiable data sources. With AI Grounding, LLM (Large Language Model) responses are anchored in high-quality, factual information from verifiable Web sources, which reduces hallucinations and helps models respond more appropriately to nuanced inputs.

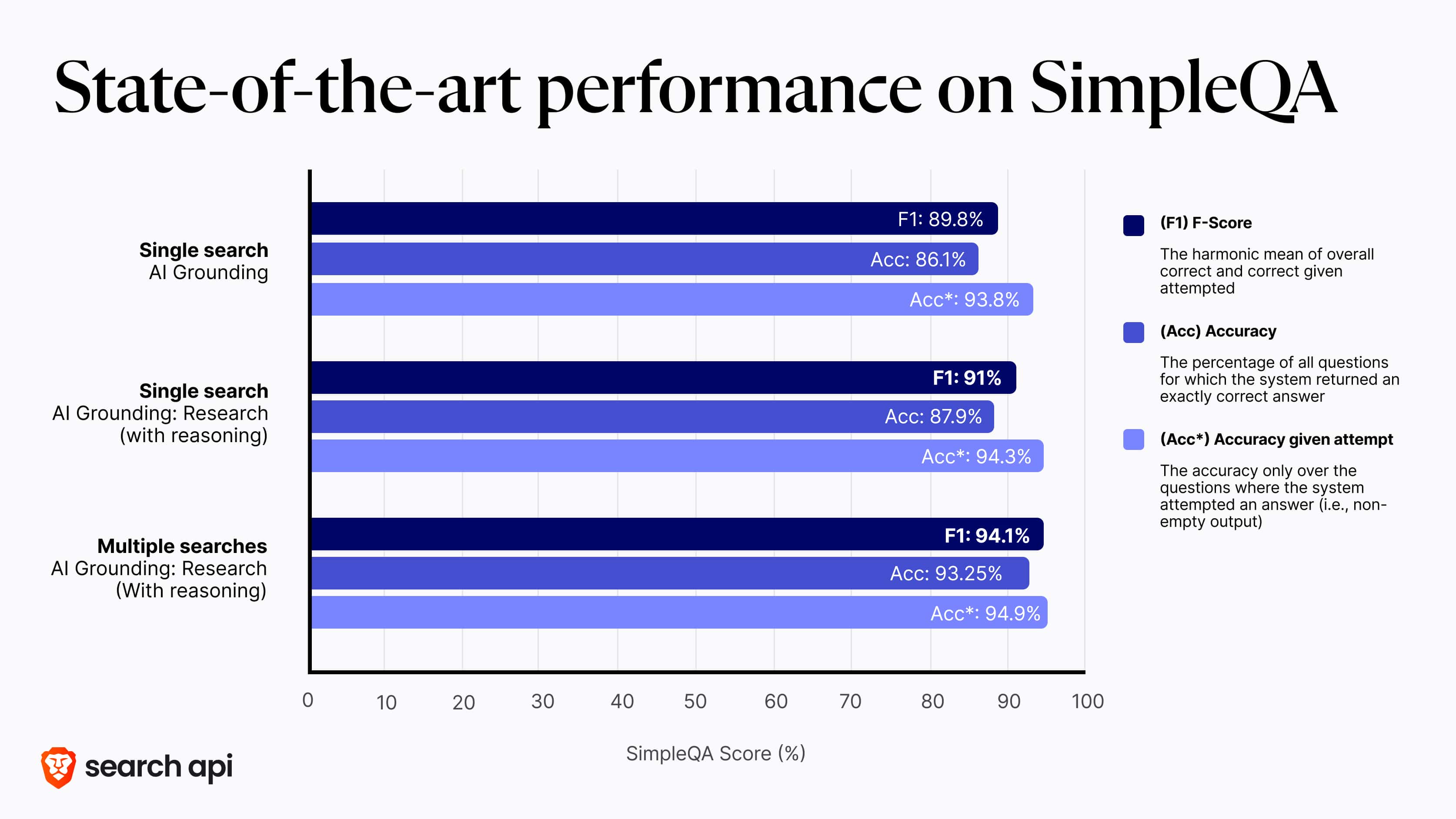

Answers generated using AI Grounding achieve state-of-the-art (SOTA) performance on the SimpleQA benchmark, with an F1-score of 94.1%. Notably, Brave’s AI Grounding service achieves these results without being specifically optimized for the SimpleQA benchmark—the performance emerges as a natural byproduct of the system’s design.

Brave’s AI Grounding already powers the Answer with AI feature on Brave Search, which handles over 15 million queries daily, and will soon support the upcoming Research-mode features in Brave Search as well as in Brave Leo, the Brave browser’s integrated AI assistant. The Brave Search API already supplies most of the top 10 AI LLMs with real-time Web search data, and for some of them, Brave is in fact the only search engine index supporting their AI answers.

Getting started with AI Grounding

Beginning today, customers of the Brave Search API can access the new AI Grounding endpoint by subscribing to one of our public AI plans (Free, Base, or Pro) or by contacting us about custom plans. Here is a high-level overview of what’s new in this release:

- AI Grounding plan (new): Answers are grounded in either a single search or the more advanced Research mode (multiple searches, reasoning, planning, and answering). This plan is priced at $4 per thousand web searches plus $5 per million tokens (input and output).

- Pro AI plan (update): Get access to this update via the existing openapi-compliant endpoint. The pricing of the Pro AI plan does not change.
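As a rough illustration of the AI Grounding plan pricing quoted above, the following sketch estimates the cost of a workload from its search and token counts. The function name and the assumption that usage is billed pro rata (with no rounding, minimums, or free allowances) are ours; actual billing rules may differ.

```python
from decimal import Decimal

# Prices quoted in the AI Grounding plan description.
PRICE_PER_1K_SEARCHES = Decimal("4")  # USD per 1,000 web searches
PRICE_PER_1M_TOKENS = Decimal("5")    # USD per 1,000,000 tokens (input + output)


def estimate_cost(searches: int, tokens: int) -> Decimal:
    """Estimate the USD cost of a workload (hypothetical helper).

    Assumes simple pro-rata billing; this is an assumption, not a
    documented billing rule.
    """
    if searches < 0 or tokens < 0:
        raise ValueError("searches and tokens must be non-negative")
    search_cost = PRICE_PER_1K_SEARCHES * searches / 1000
    token_cost = PRICE_PER_1M_TOKENS * tokens / 1_000_000
    return search_cost + token_cost


if __name__ == "__main__":
    # Hypothetical workload: 1,000 searches and 1,000,000 tokens in total.
    print(estimate_cost(1000, 1_000_000))
```

Using `Decimal` rather than floats keeps the currency arithmetic exact for small per-request amounts.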

Technical notes

Quality assessment at Brave relies on both AI-based evaluations and human assessments. These human evaluations are conducted by a dedicated team trained specifically to ensure consistency and coherence. The assessment process encompasses a wide range of dimensions—from navigational queries to blind evaluations of final outputs and more.

One of the AI tests we used is SimpleQA, a benchmark developed by OpenAI to assess the factual accuracy of large language models (LLMs) when answering short, fact-seeking questions. It focuses on questions with single, indisputable, and timeless answers, allowing straightforward evaluation using an LLM as a judge. The dataset includes 4,332 questions spanning diverse domains such as history, science, technology, art, and entertainment.

Although originally intended to evaluate LLMs without access to external information, SimpleQA has also been used to test grounding capabilities—that is, how well LLMs perform when given contextual information from the Web rather than relying solely on their internal knowledge.

Since the SimpleQA benchmark is frequently used as a proxy for measuring the factual accuracy of LLMs augmented with search-based information retrieval, we will examine how Brave compares to others in the industry and offer a detailed interpretation of the benchmark and some of its limitations.

Comparison to other API providers

An important yet often overlooked factor when evaluating performance on the SimpleQA benchmark is the number of search queries issued per question. This distinction—between single-search and multi-search strategies—can significantly influence both cost and user experience, yet it is rarely emphasized in benchmark discussions.

The choice between single-search and multi-search approaches has foundational implications:

- Single-search systems issue one query to the Web, pass the results to an LLM, and generate an answer. This method is fast and cost-efficient—on average, responses from Brave Search finish streaming in under 4.5 seconds, with minimal computational overhead.

- Multi-search systems, by contrast, perform sequential searches. The LLM iteratively refines its understanding of the query and issues additional searches, leading to:

  - Higher API call volumes

  - Larger context windows to process

  - Increased reasoning time and compute cost

Multi-search systems often stretch response times into the minutes, making them more suitable for background or high-accuracy tasks where latency is less critical. For real-time applications, single-search remains the optimal solution.
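The difference between the two strategies described above can be sketched in a few lines. This is a minimal illustration, not Brave’s implementation: `search`, `answer`, and `next_query` are hypothetical callables supplied by the caller, and `max_searches` is an assumed safety limit.

```python
from typing import Callable, List, Optional

# Hypothetical signatures; these are NOT Brave Search API calls.
SearchFn = Callable[[str], List[str]]                    # query -> result snippets
AnswerFn = Callable[[str, List[str]], str]               # (question, context) -> answer
NextQueryFn = Callable[[str, List[str]], Optional[str]]  # -> follow-up query, or None when done


def single_search(question: str, search: SearchFn, answer: AnswerFn) -> str:
    """One web query, then one LLM call over the results."""
    return answer(question, search(question))


def multi_search(
    question: str,
    search: SearchFn,
    answer: AnswerFn,
    next_query: NextQueryFn,
    max_searches: int = 5,
) -> str:
    """Search sequentially, letting the model decide whether to search again."""
    context: List[str] = []
    query: Optional[str] = question
    for _ in range(max_searches):
        context.extend(search(query))          # each pass adds API calls and context
        query = next_query(question, context)  # extra reasoning step per pass
        if query is None:                      # the model has enough information
            break
    return answer(question, context)
```

Each pass through the loop adds one search call, grows the context the LLM must process, and adds a reasoning step, which is where the extra latency and cost of multi-search come from.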

The Brave Search API demonstrates state-of-the-art (SOTA) performance in both single-search and multi-search configurations:

| Provider | Model | Single Search | Multiple Searches |
|---|---|---|---|
| Brave | AI Grounding |  | - |
| Brave | AI Grounding: Research With Reasoning |  |  |
| Perplexity | Sonar Pro |  | - |
| Perplexity | Sonar |  | - |
| Perplexity | Deep Research | - |  |
| Tavily | Tavily + GPT 4.1 | - |  |
| Exa | Unknown | - |  |
| Exa | Exa Research | - |  |
| Exa | Exa Research Pro | - |  |

Table 1: SimpleQA benchmark scores. Metrics include F1-score (F1), Accuracy (Acc), and Accuracy Given Attempted (Acc*).

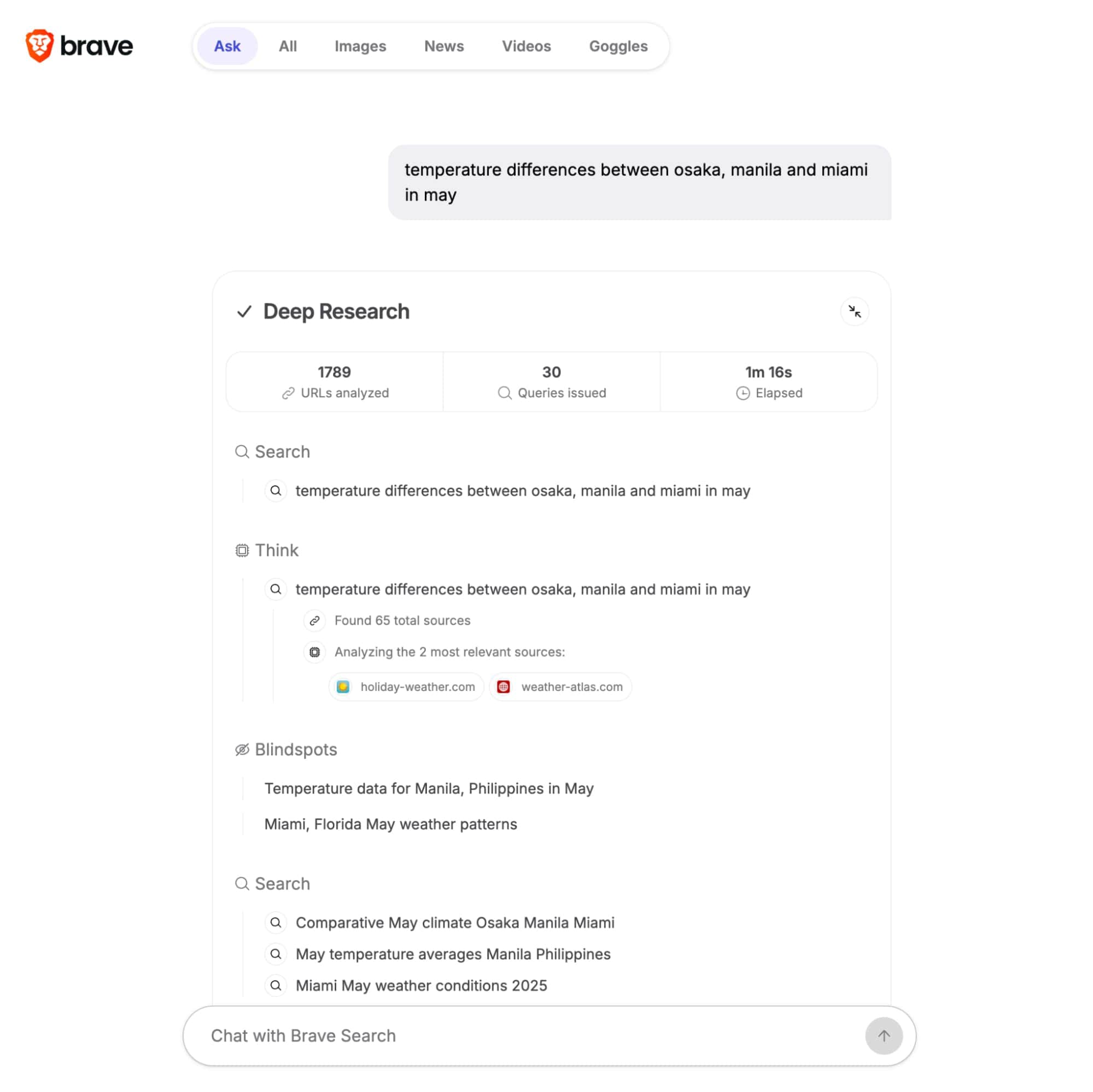

Brave can answer more than half of the questions in the benchmark using a single search, with a median response time of 24.2 seconds. On average (arithmetic mean), answering a question involves issuing 7 search queries and analyzing 210 unique pages (containing 6,257 statements or paragraphs), and takes 74 seconds to complete. The fact that most questions can be resolved with just a single query underscores the high quality of results returned by Brave Search.

Why independence matters

Google and Bing, the first and second largest search engines, do not make their search engines broadly available to other businesses. Bing’s decision to first raise its prices in 2023 and then, this year, to shut down its search API altogether leaves Brave as the only independent and commercially viable search engine API at the full scale of the global Web. Both Google and Microsoft offer limited web search extensions for their AI APIs, but these remain narrow in scope, come bundled with AI inference, and are prohibitively expensive for large business use cases.

Customers should also be aware of the risks associated with using non-independent search engine APIs that rely on scraping Google, Bing, or Brave results. First and foremost is supply-chain risk. Without independence, these scrapers (and those that rely on them) can be neutralized by Bing, Google, and Brave should they decide to invest in better protection or take legal action. The likelihood of that happening keeps growing as Google and Microsoft take further steps to protect their data moats, as evidenced by Microsoft shutting down the Bing API after years of operation.

Caveats of SimpleQA

In our view, the most important takeaway from the SimpleQA benchmark is that capable open-source models equipped with search engine access significantly outperform top-tier models that operate without retrieval capabilities. However, SimpleQA in its current form comes with important caveats and nuances. These must be acknowledged to move beyond the simplistic, headline-friendly “numbers-go-up” narrative and toward a more thoughtful understanding of what the benchmark truly reflects.

Context pollution

When the SimpleQA benchmark was first introduced, it offered valuable evaluation metrics for assessing factual accuracy. However, over time, a growing number of web pages containing benchmark queries and their corresponding answers have surfaced online. These pages have likely been incorporated into the training data of newer models and are also indexed by search engines—either through natural discovery or targeted indexing. This creates a context pollution scenario, where retrieved answers may reflect memorization or direct exposure rather than true reasoning or retrieval quality, leading to inflated benchmark scores.

To ensure the integrity of the results presented in Table 1, we implemented filtering to remove potentially contaminated sources. Specifically, we excluded:

- All URLs beginning with https://huggingface.co/datasets

- Any URL containing the string “simpleqa”

- Any page containing the normalized “simpleqa” text

This filtering process removed a significant number of URLs but yielded more reliable and meaningful scores. Based on our evaluation of other API providers’ endpoints, similar filtering practices are not consistently applied across the industry, which makes it challenging to fairly interpret and compare publicly reported results. The Search API does not exclude these URLs from search results by default.
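The three exclusion rules listed above can be sketched as a simple predicate. This is an illustrative approximation rather than Brave’s actual filter: the normalization step (lowercasing and stripping non-alphanumeric characters) and the case-insensitive URL match are assumptions, since the exact normalization is not specified.

```python
import re

BLOCKED_URL_PREFIX = "https://huggingface.co/datasets"
BENCHMARK_NAME = "simpleqa"


def normalize(text: str) -> str:
    """Lowercase and drop everything except ASCII letters and digits.

    Assumed normalization; it makes "Simple-QA" and "Simple QA" match.
    """
    return re.sub(r"[^a-z0-9]", "", text.lower())


def is_contaminated(url: str, page_text: str) -> bool:
    """Return True if a page should be excluded from the benchmark context."""
    if url.startswith(BLOCKED_URL_PREFIX):          # rule 1: dataset hosting pages
        return True
    if BENCHMARK_NAME in url.lower():               # rule 2: benchmark name in the URL
        return True
    return BENCHMARK_NAME in normalize(page_text)   # rule 3: benchmark name in the page


if __name__ == "__main__":
    print(is_contaminated("https://example.com/post", "Results on Simple-QA"))
```

A normalization this aggressive can also flag unrelated pages (for example, text containing "a simple QA process"), so a filter like this trades some recall of clean pages for a lower risk of contamination.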

Ambiguity

The questions in the SimpleQA test set exhibit varying degrees of answer ambiguity. The LLM judge (per the testing setup) marked the answers to 292 of the 4,332 questions as incorrect. To assess the accuracy of this evaluation, Brave’s human assessment team manually reviewed each of these 292 cases. Their analysis found that in 167 instances, the LLM judge had either:

- Incorrectly rejected valid answers, or

- Relied on expected answers from SimpleQA that themselves contained factual inaccuracies or oversimplifications.

From the perspective of Brave’s new grounding API, this behavior is a feature—not a bug. Our goal is to surface relevant nuance, multiple perspectives, and potential contradictions related to a given query. In contrast, SimpleQA is designed to prefer concise, single-fact answers, often at the expense of depth and contextual accuracy.

| Brave Answer | Expected SimpleQA answer |
|---|---|
| Question: What is the maximum depth of the Mediterranean Sea in meters? Answer: The maximum depth of the Mediterranean Sea is most commonly reported as 5,267 meters (17,280 feet) at the Calypso Deep in the Hellenic Trench, Ionian Sea, based on widely cited sources such as ScienceDirect, World Atlas, and sizepedia.org. However, a 2020 expedition using direct measurement sensors recorded a depth of 5,109 meters (16,762 feet) ±1 m, and a 2025 University of Barcelona study noted 5,112 meters. While the historical value (5,267 m) remains the dominant figure in recent sources, the discrepancy suggests potential updates in measurement methodologies or ongoing scientific debate. The Calypso Deep’s location is consistently confirmed in the Hellenic Trench, approximately 62.6 km southwest of Pylos, Greece. | 5109 m |

Table 2: Example of an ambiguous case where the LLM Judge flagged the answer as wrong.
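To put the human review counts reported above (292 answers flagged, 167 of them overturned) in perspective, the following back-of-the-envelope calculation shows how much the judged accuracy could shift. It assumes every question was attempted and that all 167 overturned cases would count as correct; both are simplifying assumptions, and the resulting figures are illustrative rather than reported results.

```python
TOTAL_QUESTIONS = 4332       # size of the SimpleQA test set
FLAGGED_INCORRECT = 292      # answers the LLM judge marked as incorrect
OVERTURNED_BY_REVIEW = 167   # flagged cases the human review disputed


def accuracy(total: int, wrong: int) -> float:
    """Share of questions answered correctly, assuming all were attempted."""
    return (total - wrong) / total


# Accuracy as scored by the LLM judge.
judge_accuracy = accuracy(TOTAL_QUESTIONS, FLAGGED_INCORRECT)

# Upper bound if every overturned case is counted as correct (assumption).
reviewed_accuracy = accuracy(TOTAL_QUESTIONS, FLAGGED_INCORRECT - OVERTURNED_BY_REVIEW)

# Share of the judge's "incorrect" verdicts that the review disputed.
overturn_rate = OVERTURNED_BY_REVIEW / FLAGGED_INCORRECT

if __name__ == "__main__":
    print(f"judge accuracy:    {judge_accuracy:.2%}")
    print(f"reviewed accuracy: {reviewed_accuracy:.2%}")
    print(f"overturn rate:     {overturn_rate:.1%}")
```

Under those assumptions, more than half of the judge’s "incorrect" verdicts are disputed, and the gap between judged and reviewed accuracy is several percentage points, far larger than the differences that typically separate systems at the top of the leaderboard.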

“Tuning” the scores

Benchmark scores are influenced by several factors that can meaningfully affect reported results. Two key considerations are run-to-run variance and the choice of evaluation metric.

Variance between test runs

F1 scores can fluctuate across test runs due to the non-deterministic nature of LLMs. Variations of up to 0.2% are common, stemming from randomness in both answer generation and the judging process. While such differences may seem minor, they can materially impact comparisons between systems, especially at the high end of performance. We disclose this variability to ensure transparency and to help contextualize our results.

Metric selection

Benchmark results typically include three metrics: F1-score, Accuracy, and Accuracy Given Attempted (Acc*). Organizations may choose to emphasize whichever metric portrays their system most favorably. For example, some systems are configured to skip difficult questions and report results on the filtered subset (Acc*), rather than attempting every question. For this evaluation, Brave configured the LLM to attempt every question and has published all three metrics in Table 1, offering a complete and unbiased view of performance.
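The relationship between the three metrics can be made concrete with a short sketch. It follows the SimpleQA convention in which each answer is graded as correct, incorrect, or not attempted, and the F1-score is the harmonic mean of Accuracy and Accuracy Given Attempted; we assume the scores in Table 1 follow this convention. The function name and the sample counts in the usage note are hypothetical.

```python
def simpleqa_metrics(correct: int, incorrect: int, not_attempted: int) -> dict:
    """Compute Accuracy, Accuracy Given Attempted (Acc*), and F1 from grade counts."""
    total = correct + incorrect + not_attempted
    if total == 0:
        raise ValueError("at least one graded question is required")
    attempted = correct + incorrect

    acc = correct / total                                   # over all questions
    acc_attempted = correct / attempted if attempted else 0.0  # over attempted only
    denom = acc + acc_attempted
    # Harmonic mean of the two accuracies.
    f1 = 2 * acc * acc_attempted / denom if denom else 0.0

    return {"accuracy": acc, "accuracy_given_attempted": acc_attempted, "f1": f1}


if __name__ == "__main__":
    # Hypothetical counts: a system that skips 30 of 100 questions.
    print(simpleqa_metrics(correct=60, incorrect=10, not_attempted=30))
    # Hypothetical counts: a system that attempts every question.
    print(simpleqa_metrics(correct=90, incorrect=10, not_attempted=0))
```

When a system attempts every question, all three metrics coincide. When it skips questions, Acc* rises above Accuracy, which is why reporting Acc* alone can flatter a system.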

Conclusion

As AI continues to permeate search, productivity, and creative workflows, grounding with high-quality, independent sources is no longer optional. It has become essential for building trust with end users and delivering value to them. With the introduction of AI Grounding with the Brave Search API, we’ve set a new standard for best-in-class AI search to power world-class LLM applications.

The consistent growth of Brave Search among end users is evidence of our quality, and each week more than 4,000 developers sign up for the Brave Search API to bring that same level of quality to their own products. To get started with AI Grounding, sign up for the Brave Search API or contact us about custom plans for large enterprises.

About Brave, Brave Search, and the Brave Search API

Brave is a privacy-preserving Web browser with nearly 94 million monthly active users worldwide. Brave Search is the default search engine in Brave, and is also available in any browser at search.brave.com. Brave Search is the third-largest global independent search engine, with an index of over 30 billion webpages and handling over 1.5 billion monthly queries.

The Brave Search API helps organizations supply their AI LLMs with real-time data, power agentic search, train foundation models, and create search-enabled software. It is built for applications that benefit from access to the Web, going beyond the static knowledge of AI models.

Update: September 1st, 2025

We have released a new model update, which is a considerable improvement over the previous one.

Single Search sees the most substantial gains from this enhancement. Users can now rely on our fastest model to deliver high-quality, grounded answers with even greater confidence and speed.

| Model | Previous Performance | Current Performance | Change (p.p.) |
|---|---|---|---|
| AI Grounding (no reasoning) |  |  |  |
| AI Grounding: Research (with reasoning) |  |  |  |

Table 3: Single Search

Research mode has also received major improvements. The updated model matches the performance of our previous best results while operating with a reduced context window, leading to faster response times and lower costs.