AI browsing in Brave Nightly now available for early testing

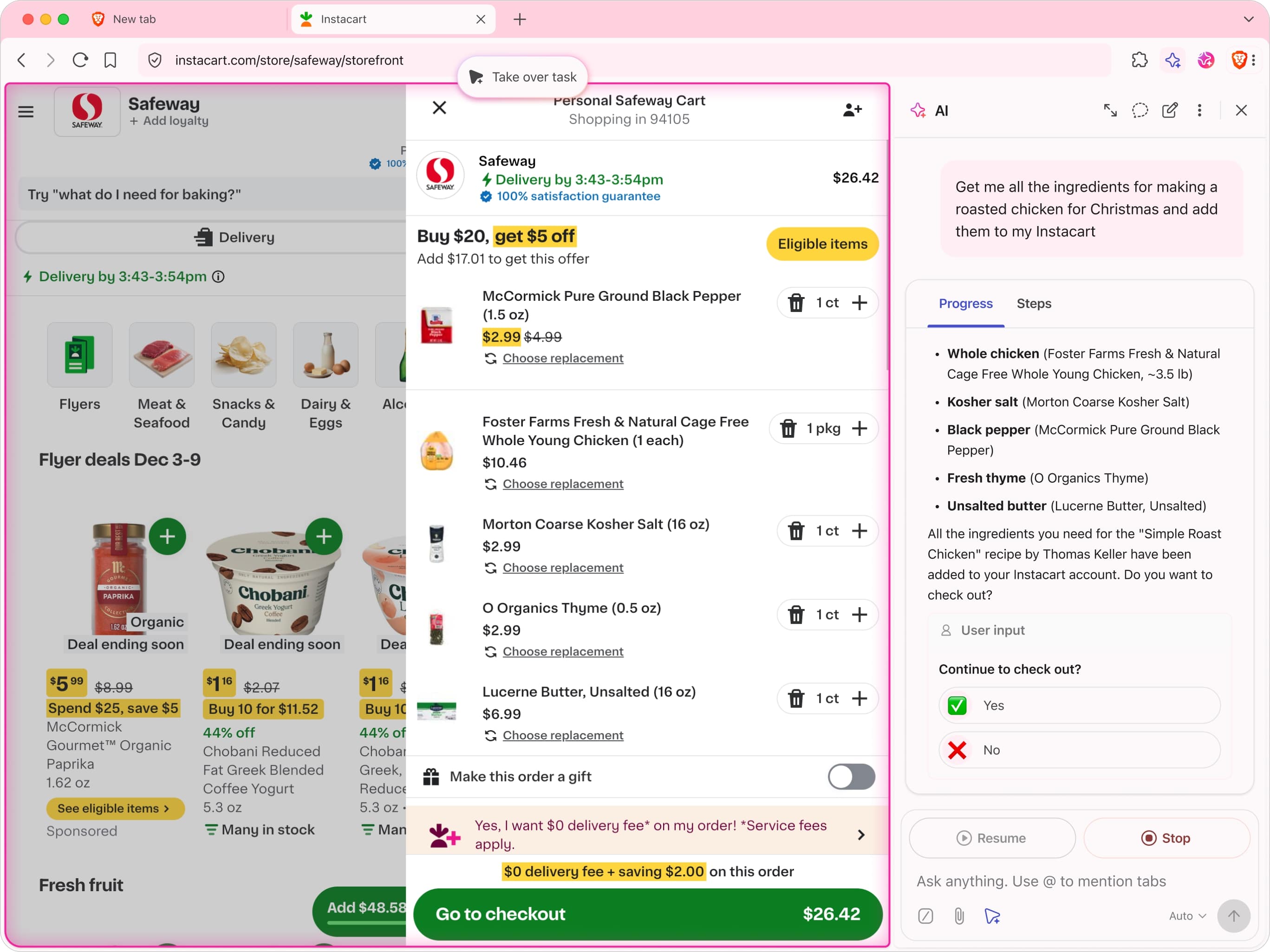

Today, we’re announcing the availability of Brave’s new AI browsing feature in Brave Nightly (our testing and development build channel) for early experimentation and user feedback. When ready for general release, this agentic experience aims to turn the browser into a truly smart partner, automating tasks and helping people accomplish more.

However, agentic browsing is also inherently dangerous. Giving an AI control of your browsing experience could expose personal data, or allow agentic AI to take unintended actions. Security measures are tricky to get right and disastrous when they fail, as we have shown through numerous vulnerabilities we found and responsibly disclosed over the last few months. Indirect prompt injections are a systemic challenge facing the entire category of AI-powered browsers.

For this reason, we’ve chosen a careful approach to releasing AI browsing in the Brave browser and soliciting input from security researchers. We are offering AI browsing behind an opt-in feature flag in the Nightly version of Brave, which is the browser build we use for testing and development. We’ll continue to build upon our defenses over time with feedback from the public. At present, these safeguards include:

- AI browsing is currently available only in the Nightly channel behind an opt-in feature flag, via Brave’s integrated AI assistant Leo.

- AI browsing happens only in an isolated browsing profile, keeping your regular browsing data safe.

- AI browsing has restrictions and controls built into the browser.

- AI browsing uses reasoning-based defenses as an additional guardrail against malicious websites.

- The AI browsing experience has to be manually invoked, ensuring that users retain complete control over their browsing experience.

- Like all AI features in Brave, AI browsing is completely optional, off by default.

While these mitigations help significantly, users (even early testers) should know that such safeguards do not eliminate risks such as prompt injection. As an open-source browser, we welcome bug reports and feature requests on GitHub. We also encourage anyone who discovers a security issue in AI browsing to report that issue to our bug bounty program. In this early release phase, valid and in-scope security issues in AI browsing will receive double our usual reward amounts (see our HackerOne page for more details).

Despite its risks, AI browsing shows great promise. As we outlined in our 2025 browser AI roadmap update, we’re confident that a smart and personalized collaborator that adapts to your needs can ultimately transform the way you browse the Web. For instance, it could research topics by visiting and analyzing multiple websites, compare products across different shopping sites, check for valid promo codes before purchases and summarize news the way you like it. The security and privacy challenges are novel and significant, but given the potential for AI browsing to become a widely-adopted browser feature, we first need to get feedback from early testers, and iterate towards a solution that is safe for all users (See “How to test AI browsing” section below).

Preventing the AI agent from taking unwanted actions

At root, the security and privacy risks of agentic browsing have to do with alignment: you want to prevent the AI from taking unintended actions. This is a hard security and privacy problem. Given how open-ended inputs can be for AI browser agents, and given the browser’s level of access to the Web, a given prompt could apply to basically any request on almost any website.

Adding to this challenge, reasoning models are probabilistic: the same request of the AI model could produce different results at different times, so the output space of possible AI browser agent actions is hard to limit.

It would be easy if security engineers could simply tell an LLM (large language model) never to do “bad things.” Unfortunately, given the browser’s level of access and the reasoning capabilities of today’s models, it would be naive to assume that this strategy would work in isolation. It’s still relatively easy to subvert models into performing risky actions, and we want to avoid a situation where we’re constantly chasing new security vulnerabilities (“whack-a-mole”).

Additionally, while we’re concerned about indirect prompt injections on websites (where an attacker embeds malicious instructions in Web content through various methods), we’re also aware that the security threat model with agentic browsing doesn’t always need an attacker: in some cases, the AI could simply misinterpret user commands. To put it simply, the two risks we want to protect Brave users from are:

- Malicious actors who want to do prompt injection on a website

- The model getting confused and taking an action that’s harmful to the user

We believe any agentic browsing experience should have robust protections against these two threats.

Defenses against security threats

Given the potential for harm, the protections outlined below are not an exhaustive list, but what we consider minimally necessary before rolling out an agentic experience even for early user testing.

Isolated storage for AI browsing

Many Brave users will likely be logged into sensitive websites (e.g., their banking website or email account) in their main Brave browser profile. With AI browsing, we need to prevent possible attackers from gaining access to those logged-in services. Brave’s AI browsing therefore keeps its storage separate from your regular profile: cookies, logged-in state, caches, and other site data do not cross profiles. This limits harm if defenses fail and a model is manipulated into a dangerous action.

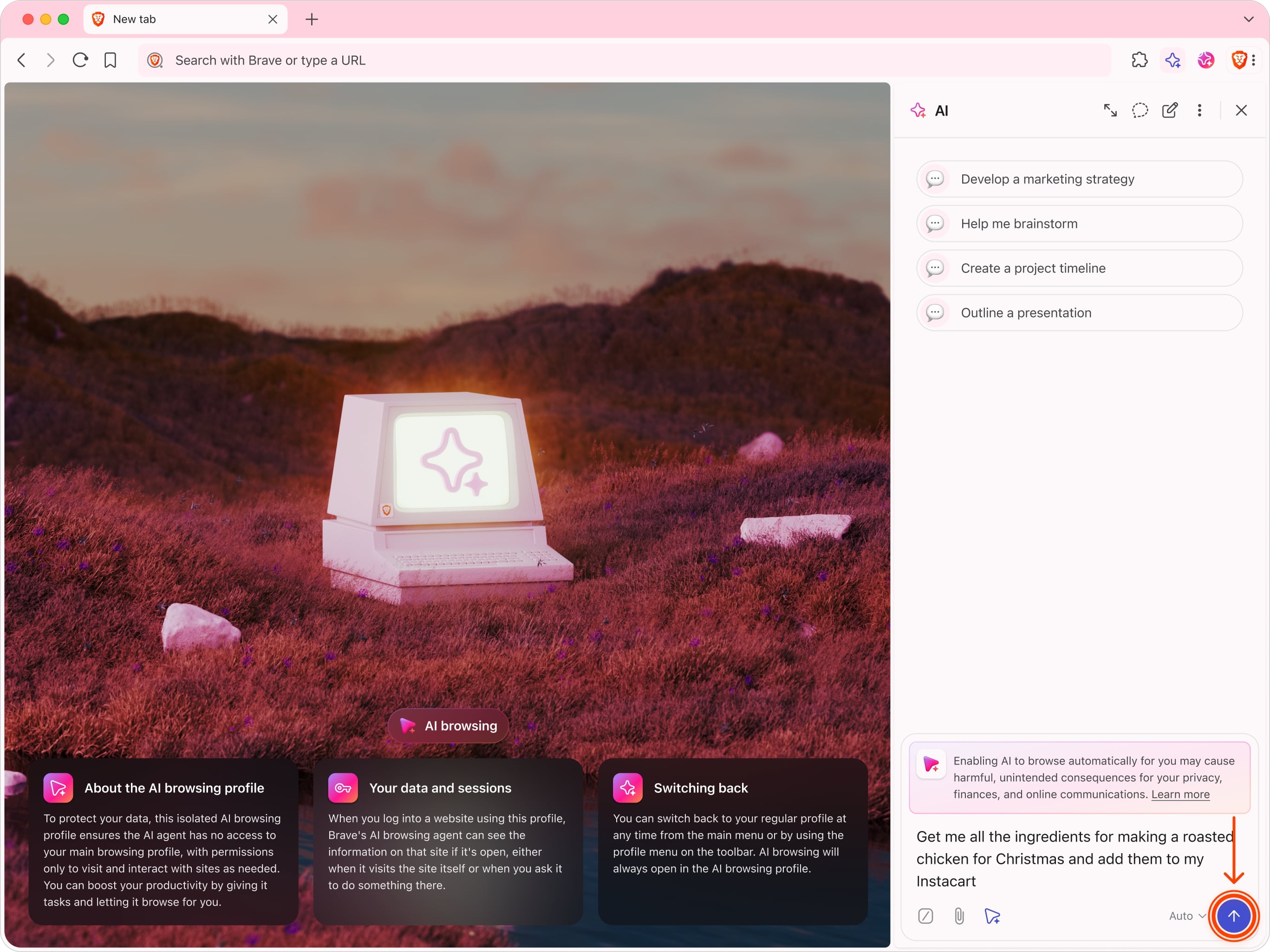

Given the inherent risk of agentic browsing, the user must manually invoke AI browsing. When you enable AI browsing, Brave creates a brand-new browser profile. This new profile isolates all data available to the AI agent.

For now, we believe that this approach of completely isolating your browsing data is the safest approach to AI browsing.

Model-based protections

We also use a second model to check the work of the AI agent’s model (the task model). This “alignment checker” serves as a guardrail: it receives the system prompt, the user prompt, and the task model’s response, and then checks if the task model’s instructions match the user’s intention. This checker does not directly receive raw website content—by firewalling it from untrusted website input, we can reduce (but not eliminate) the risk of subversion by page-level prompt injection. We also provide security-aware system instructions: a structured prompt authored by us that encodes policy-based rules we will refine over time. In addition, we use models trained to mitigate prompt injections, such as Claude Sonnet.

It’s worth noting that guardrails are not proof of safety—they can help against, but not eliminate, risk. LLMs are non-deterministic and fallible, and the output of the task model can be subverted by untrusted page content to specifically attack the alignment checker model.

Browser controls and UX

A core goal of privacy engineering is to reduce user surprise. To this end, AI browsing in Brave must be deliberately invoked by the user. While the regular AI-assistant Leo can now suggest browsing actions based on the user’s prompt, it can never on its own initiate AI browsing without consent. And, similar to how Private Windows and Private Windows with Tor in Brave browser are styled differently, the AI browsing profile is styled differently from Leo, and has distinct action cues. This helps make it obvious to users that they’re in AI browsing mode.

Users of AI browsing in Brave can inspect and pause sessions, and the AI cannot by itself delete session logs. All browsing on your behalf happens in an open tab, rather than being hidden in a sidebar. And, as always, the user can delete all data from the agentic session at any time.

Safeguards include:

- AI browsing does not have access to internal pages (such as

brave://settings), non-HTTPS pages, extension pages on the Web Store, or websites flagged by Safe Browsing. - Actions detected as misaligned by our reasoning-model-based protections (as explained above) will trigger a warning for the user and require explicit permission.

- For both agentic and in-browser assistant use cases, users clearly see any memory proposed for saving, which they can then undo (this prevents “saved” prompt injection attacks).

Unparalleled privacy

As a privacy-first company, we enforce our strict no-logs, no-retention data privacy policy, maintaining Brave’s commitment to protecting your data.

This is worth emphasizing. AI browsing in Brave never trains on your data, unlike other agentic browsers.

As always, even while in AI browsing, you get all of the Brave browser’s best-in-class privacy protections including blocking of invasive ads and trackers.

A note on permission prompting

We are not using a per-site permission prompting approach (example: “allow agentic actions on example.com?”) for AI browsing. Our browser development experience shows that repeated, low-signal security prompts with incomplete contexts train users to ignore warnings, which leads to diminished protection. We want to be careful when asking users to make a security-critical decision and will reserve this for specific actions that are detected as potentially risky by our model-based protections. We may reevaluate our per-site permissioning approach later, pending feedback from users and researchers, and insights into how users are using AI in the browser.

AI browsing never has access to internal pages (such as brave://settings or brave://settings/privacy), non-HTTPS pages, Chrome Web Store, or websites flagged by Safe Browsing.

The future of AI browsing in Brave

AI browsing is powerful, and we’re excited to see it live for user testing in Nightly. At the same time, we want to protect the user and uphold Brave’s core promise of privacy and security. This is a work in progress; AI browsing is not an experience that should be rushed out the door at the expense of users’ privacy and security. We expect to learn much from user feedback, and to make improvements and contributions that will also benefit the entire agentic browser space. We’re building the agentic experience with transparency, restraint, and respect for user intent. Ultimately, AI is just one tool toward Brave’s original mission: to empower and protect people online. That’s the line we’re drawing, and we’re looking forward to feedback from users and researchers to help us walk that line well.

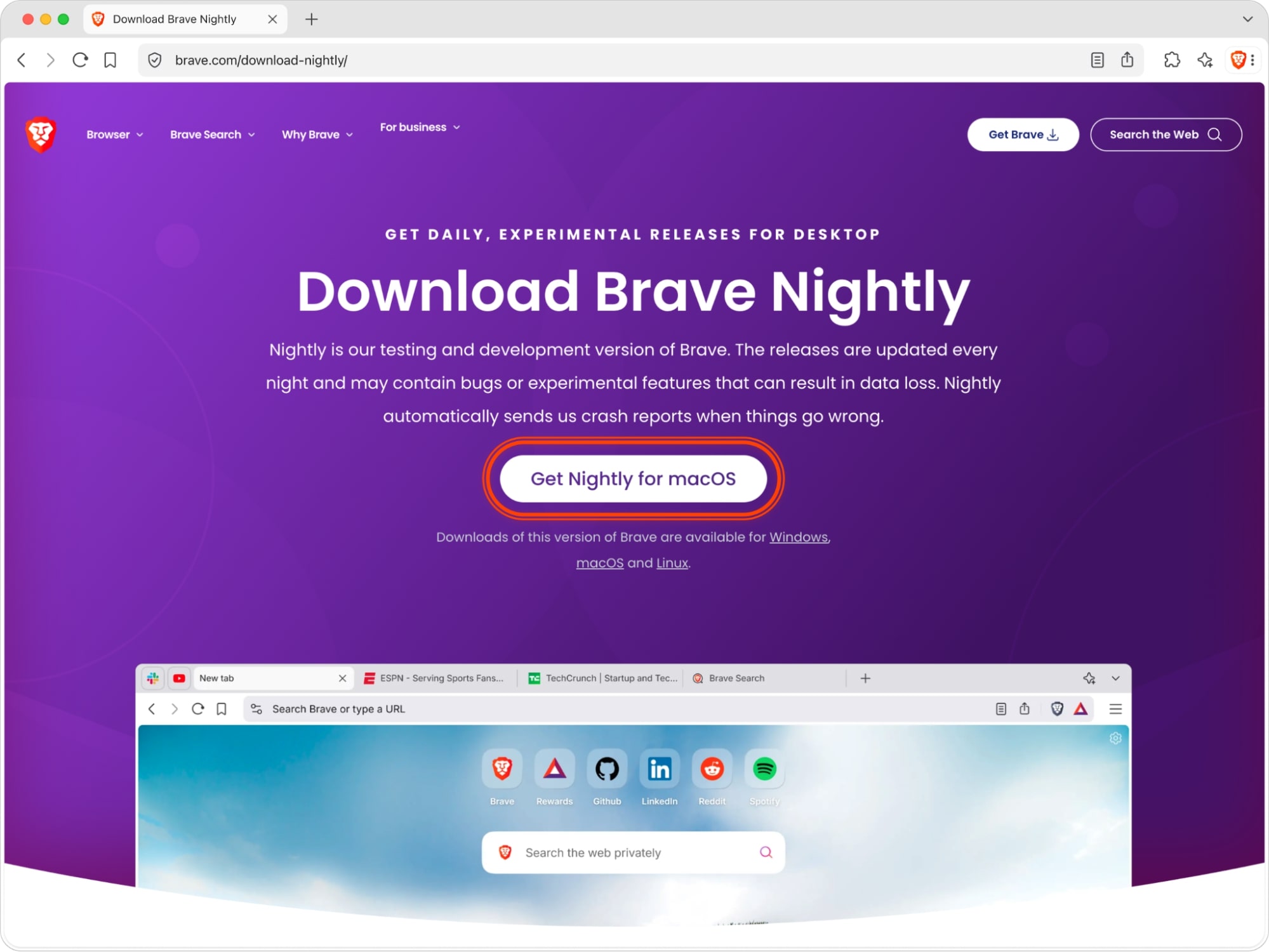

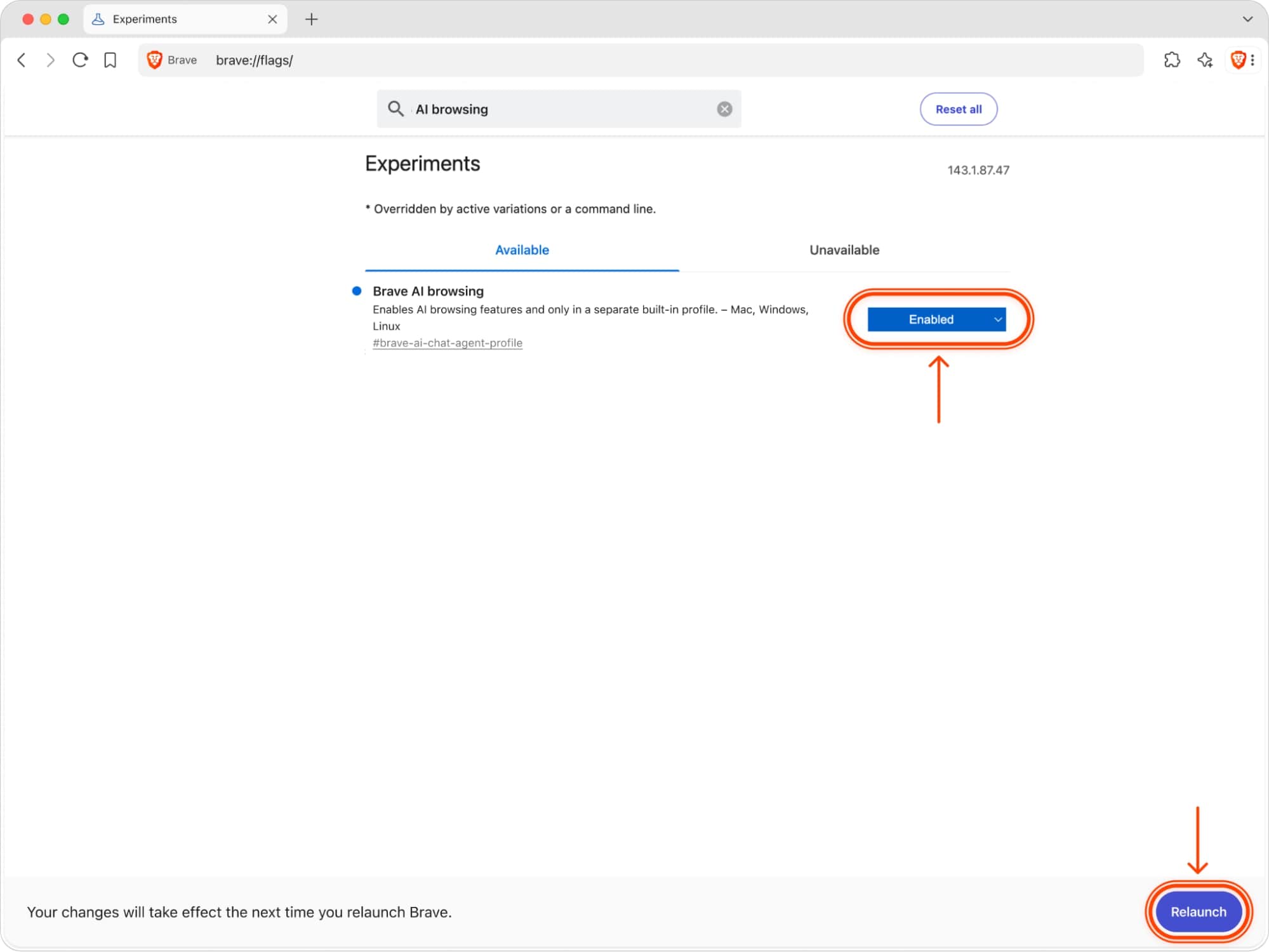

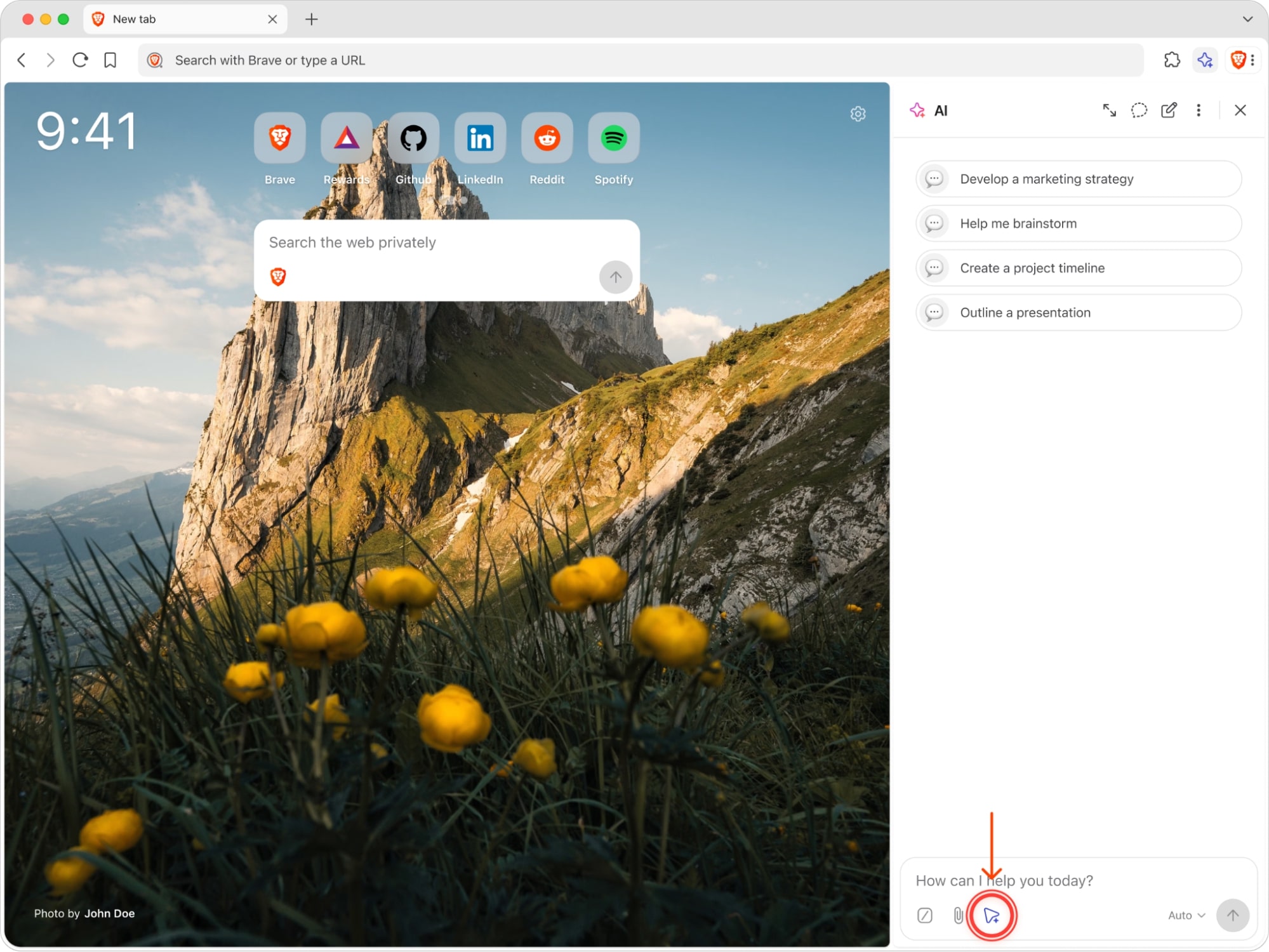

How to test AI browsing

For those who wish to test it, AI browsing is available in Brave Nightly via the “Brave’s AI browsing” flag in brave://flags. A feature flag is essentially a switch in a secondary settings page where advanced users can enable or disable experimental features. Testers can enable AI browsing within Leo, the Brave browser’s integrated AI assistant, via the button in the message input box. Like all AI features in Brave, AI browsing is completely optional, and Leo can be disabled by users.

More details about AI browsing can be found here. We welcome tester feedback and requests here, and bug reports here.