Bring Your Own Model (BYOM): using Brave Leo with your own LLMs

Note: As of August 22, 2024, BYOM is now in general release and available to all desktop users (with browser update version 1.69 or higher).

AI assistants have become a common part of modern Web browsers. Brave has Leo, Edge has Copilot, Opera has Aria, Arc has Max… This new wave of AI integration has raised many questions among developers and users alike. What’s the best way to interact with an AI assistant within the browser? What unique opportunities arise when AI is brought directly into the browsing experience? And, perhaps most importantly: How can we effectively safeguard a user’s data, and allow them to configure their own AI models?

In our Leo roadmap–where we outlined how Brave is thinking about Leo and the future of AI integrations within the browser (aka Browser AI)—we pointed out the need to allow users to run models locally, and to configure their own models. Running models locally (i.e. directly on device) ensures that data like conversations, webpages, and writing prompts exchanged with the AI assistant never leave the user’s device. This approach will also open more opportunities for assisting users with their local data while safeguarding end user privacy. Additionally, by allowing users to configure their own AI models, whether running locally or hosted remotely, Brave empowers users to tailor the AI’s behavior, output, and capabilities to their specific needs and preferences.

Your model, your rules

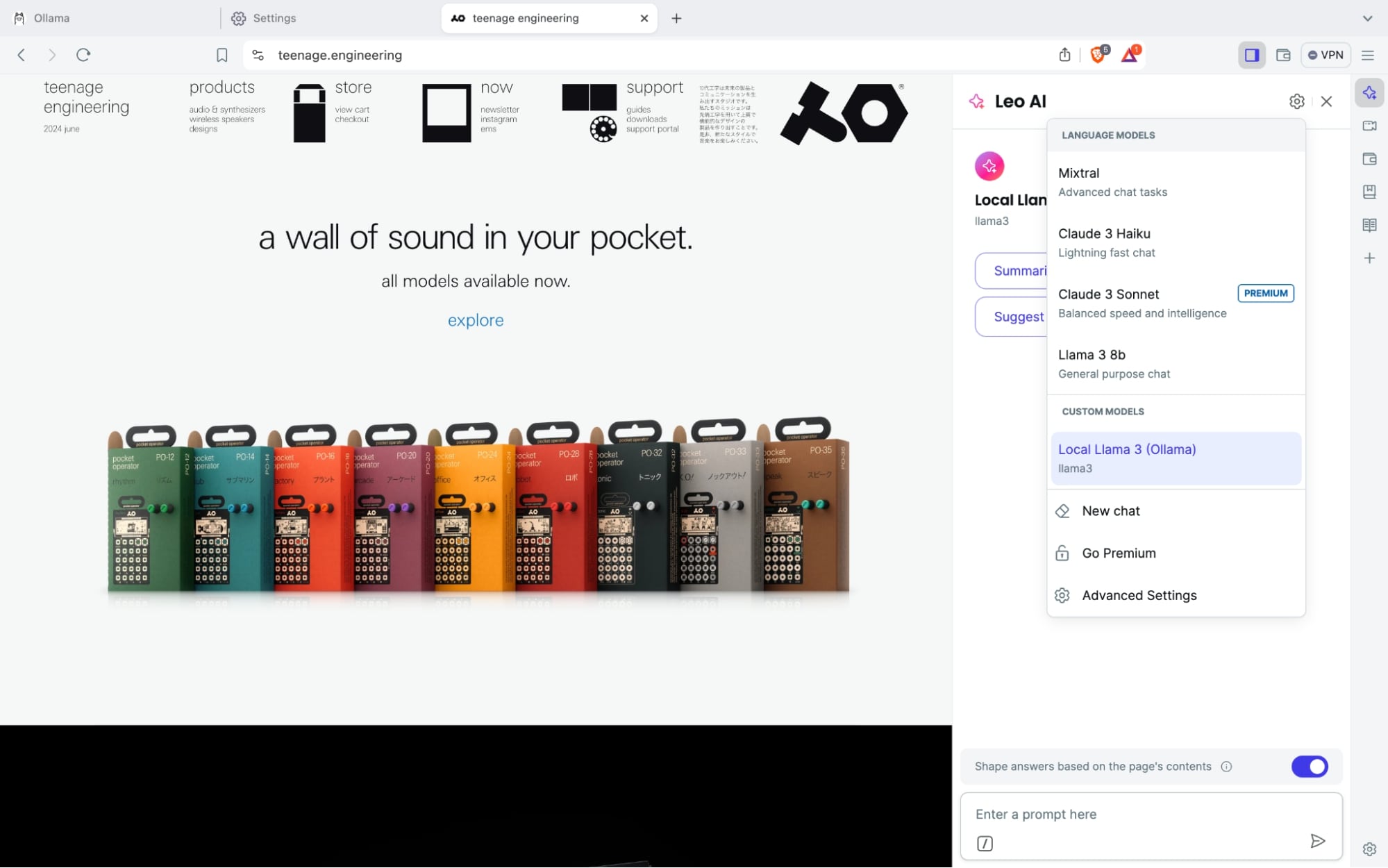

Our first step towards that promise is called “bring your own model” (or “BYOM” for short). This optional new way of using Leo, Brave’s native browser AI, allows users to link it directly with their own AI models. BYOM allows users to run Leo with local models running safely and privately on their own machines. Users will be able to chat or ask questions on webpages (and docs and PDFs) to Leo without their content ever having to leave the device.

BYOM isn’t limited to just local models, either–BYOM users will also have the flexibility to connect Leo to remote models running on their own servers, or that are run by third-parties (e.g. ChatGPT). This opens new possibilities for users and organizations to leverage proprietary or custom models while still benefiting from the convenience of querying the AI directly within the browser.

With BYOM, the requests are made directly from the user’s device to the specified model endpoint, bypassing Brave entirely. Brave does not act as an intermediary and has absolutely no access to—or visibility into—the traffic between the user and the model. This direct connection ensures end-to-end control for the user.

BYOM is currently available on the Brave Nightly channel for developers and testers, and is targeted to launch in full release later this summer. Note that BYOM is initially for Desktop users only.

Getting started Using Local Models with BYOM in Leo

No special technical knowledge or hardware is required to try BYOM. The local LLMs landscape has evolved so fast that it is now possible to run performant local models on-device in just a few simple steps.

For example, consider a hobbyist-favorite framework for running local LLMs: Ollama. The Ollama framework supports an extensive list of local models that range from 829MB to 40GB in size.

You can get started using a local LLM in Brave Leo in two easy steps:

Step 1: Download and install Ollama

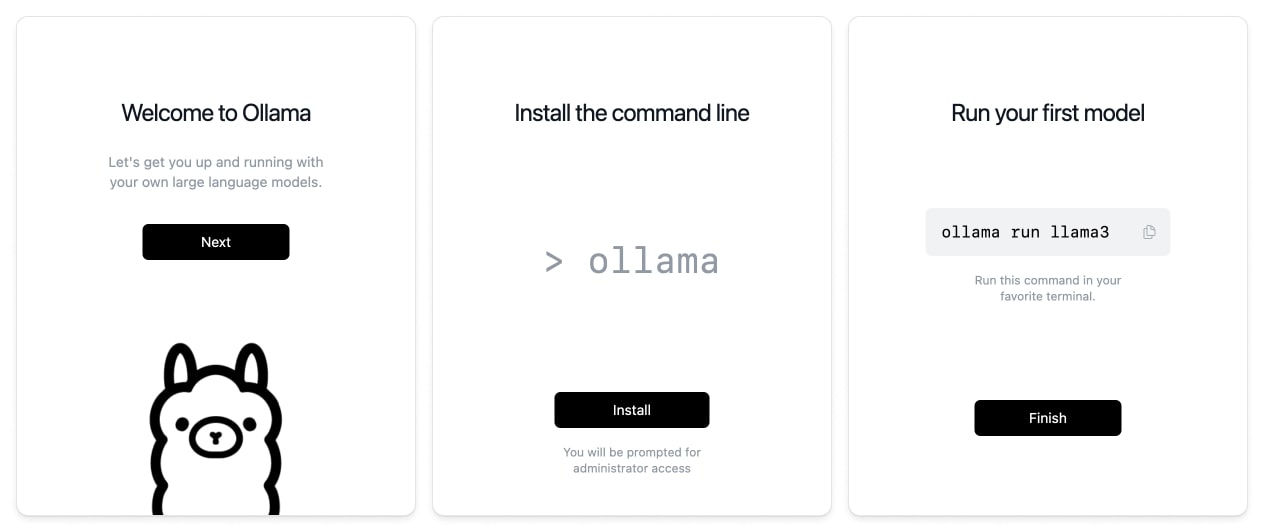

First, visit https://ollama.com/download to download Ollama. Select your platform and continue with the download. Once you download and unzip the file, make sure to move the application somewhere you can easily access, such as your computer’s desktop or Application folder. You will have to start the serving framework whenever you want to use the local model.

Next, click the application and wait for it to open, and complete the install steps.

After you complete these steps and click Finish, you’ll be able to run Meta’s Llama 3 model on your machine just by opening your terminal and typing:

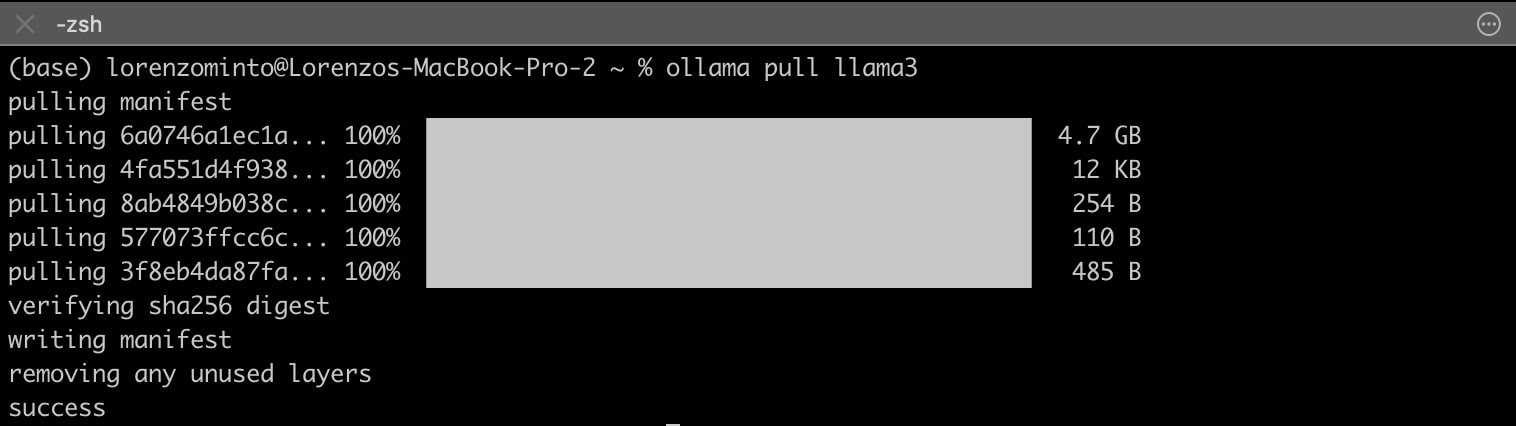

ollama pull llama3You should see that the model manifest and the model are being downloaded (note this step may take a while to complete, depending on the file size and your connection speed). Once the model has been pulled successfully, you can close your terminal.

Llama 3, at 4.7GB for an 8B parameter model, strikes a good balance between quality and performance, and can be easily run on a laptop with at least 8GB of RAM. Other models we found suitable to be run locally are Mistral 7B (by Mistral AI) and Phi 3 Mini (by Microsoft). See the full list of models supported by Ollama.

Now that the model is running locally on your device, no data is being transmitted to any third party. Your data remains yours.

Step 2: Plug your model into Leo

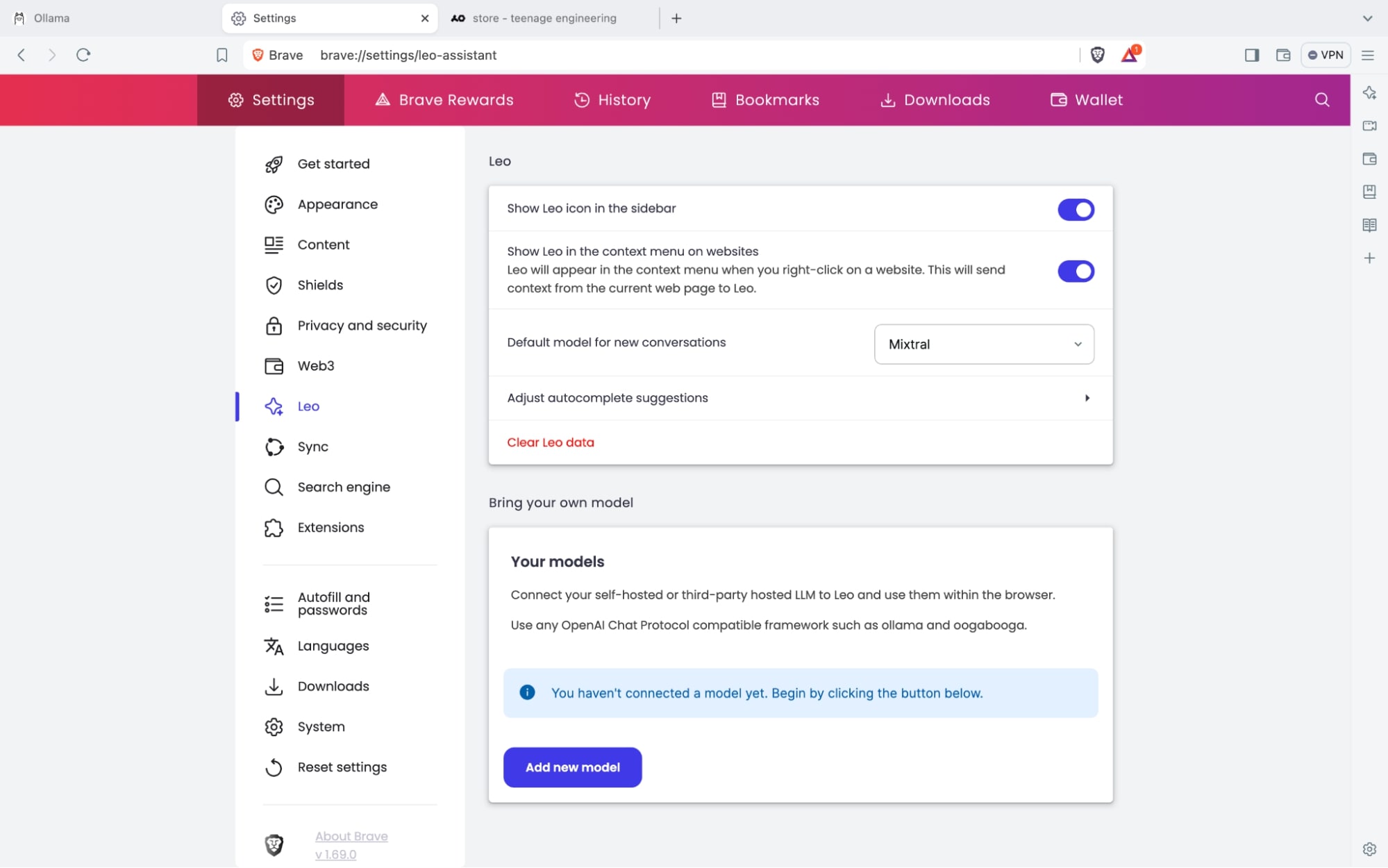

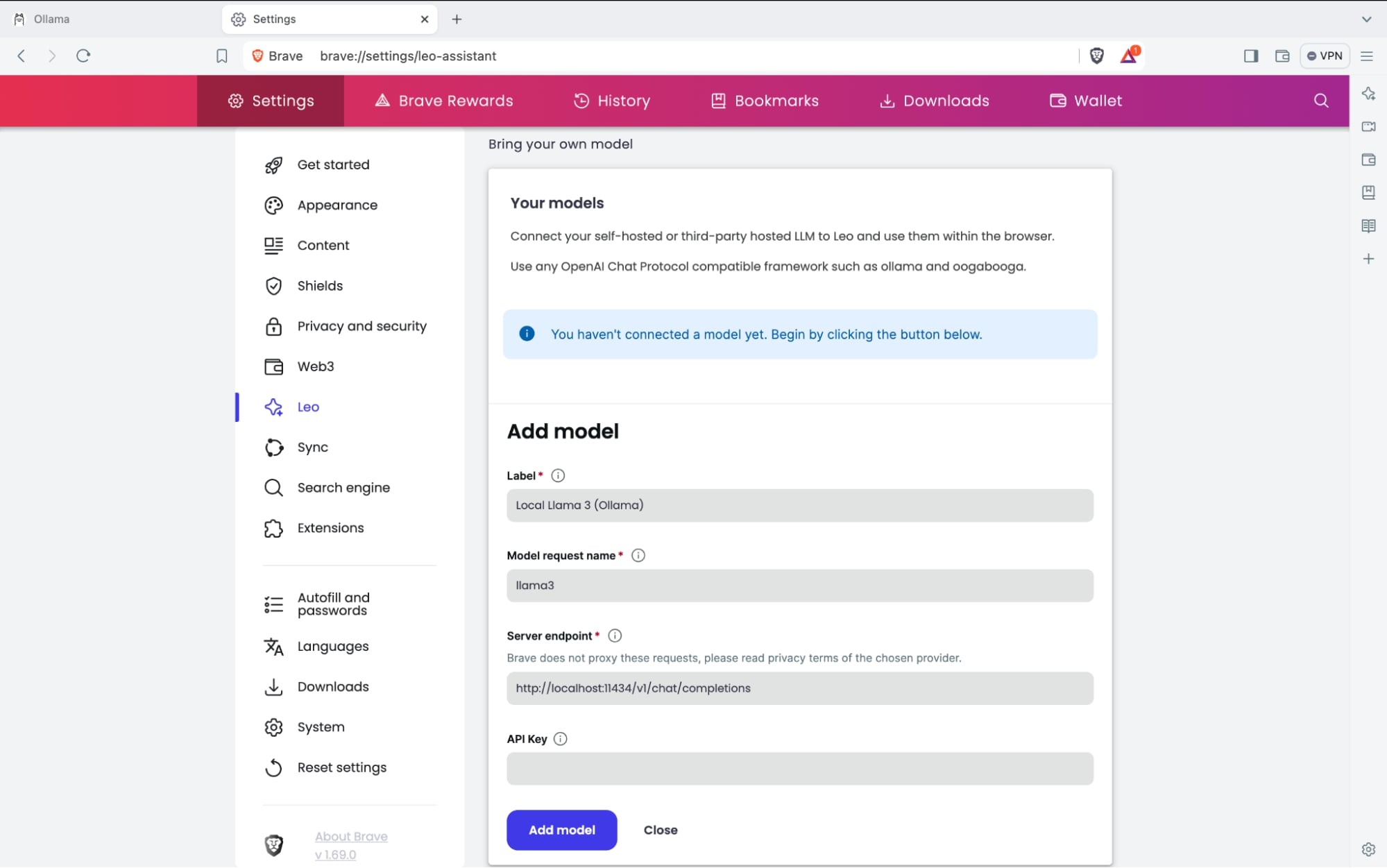

To add your own local model to Leo, open the Brave browser and visit Settings, and then Leo. Scroll to the Bring your own model section and click Add new model.

You’ll then be brought to a new interface where you can add the details of your model. The required fields are the following:

-

Label: The name of the model as it will appear in the model selection menu.

-

Model request name: The name of the model as it should appear in the request to the serving framework, e.g. llama-3 (note that if this name doesn’t match exactly what is expected by the serving framework, the integration will not work).

-

Server endpoint: The url where your serving framework is “listening” for requests. If you’re not sure, check the serving framework documentation (For Ollama, this is always

http://localhost:11434/v1/chat/completions). -

API Key: third party frameworks may require authentication credentials, such as an API key or an access token. These will be added to the request header.

Click Add model; your local model should now appear in the Leo model menu. Simply select it to use it to power your browser AI.

Note that while we’ve used Ollama in this section, as we think it’s one of the most user-friendly frameworks to set up and run local models, the BYOM feature can be used with any local serving framework with an exposed endpoint and that conforms to the OpenAI chat protocol.

Using Remote Models (ChatGPT) with BYOM in Leo

To connect Leo to a remote model or a third party API, such as OpenAI API, follow these steps:

-

Enter

https://api.openai.com/v1/chat/completionsas the endpoint, and add the name of your desired model (e.g.gpt-4osee model list) in theModel request namefield. -

Enter your private API key in the

Authentication Credentialsfield to authenticate your requests to the API. The key is then stored safely in your browser.

Note that—at the time of writing—Brave Leo only supports textual input and outputs; outputs of any other modality will not be processed. You can now use Brave Leo with ChatGPT through your OpenAI API account.

If you’re a Brave Nightly user, please give BYOM a try, and let us know what you think on X @BraveNightly or at https://community.brave.app/!