Digital Sovereignty & Maintaining Our Connection with Humanity

[00:00:00] Luke: From

[00:00:02] Anupam: privacy concerns to limitless potential, AI is rapidly impacting our evolving society. In this new season of the Brave Technologist

[00:00:10] Luke: podcast, we’re demystifying artificial intelligence, challenging the status quo, and empowering

[00:00:15] Anupam: everyday people to embrace the digital revolution. I’m your host, Luke Malks, VP of Business Operations

[00:00:20] Luke: at Brave Software,

[00:00:21] Anupam: makers of the privacy respecting Brave browser.

[00:00:24] And search engine now powering AI with the brave

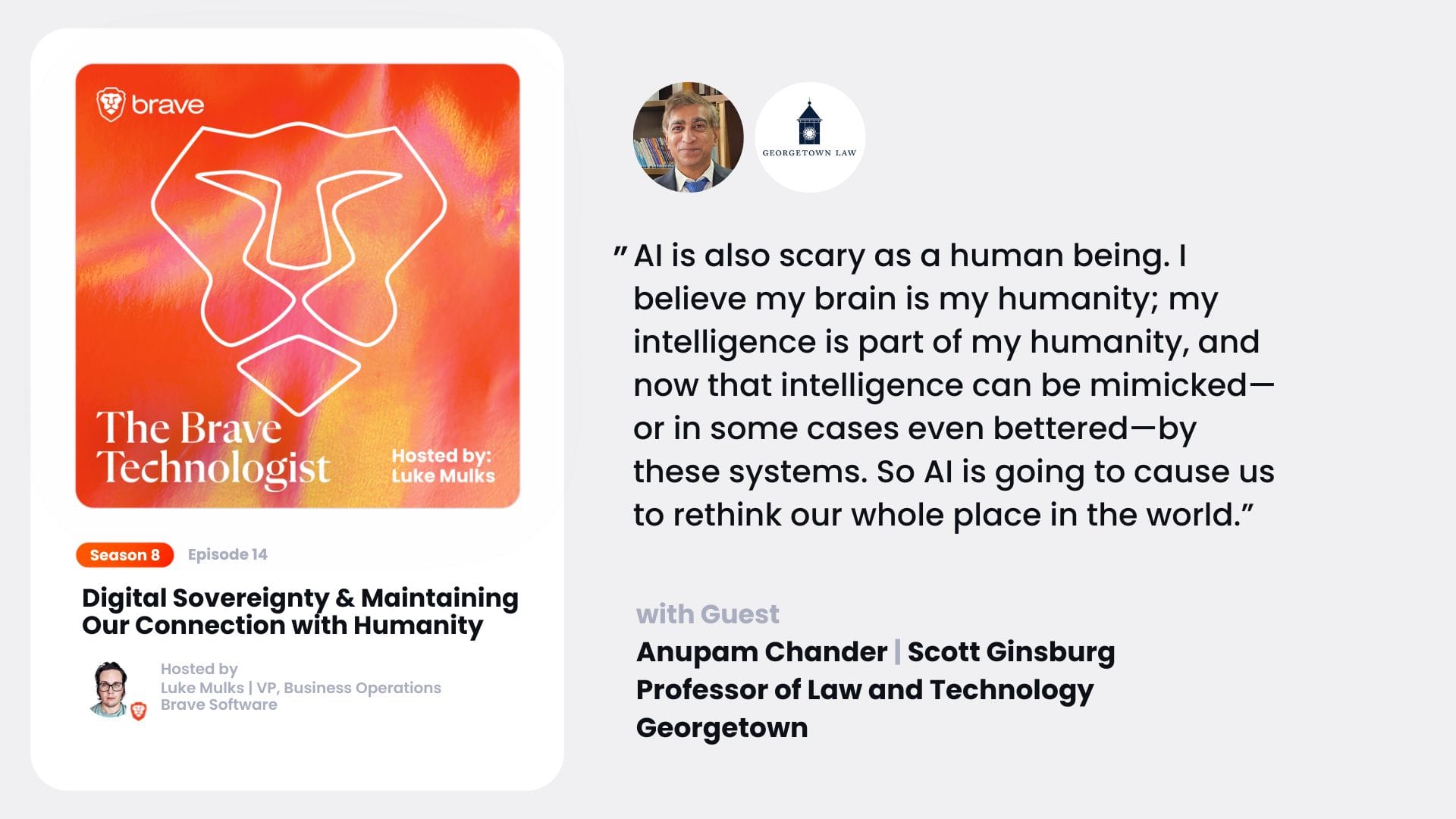

[00:00:26] Luke: search API. You’re listening to a new episode of the brave technologist, and this one features Anupam Chander. He’s a professor of law and technology at Georgetown university and the author of the electronic silk road published by Yale university press.

[00:00:40] He’s practiced law in New York and Hong Kong, and he’s a visiting scholar at the Institute for Rebooting Social Media at Harvard University. In this episode, we discussed digital sovereignty: what it is, what it means, and how AI is impacting digital sovereignty as a concept. We discussed Section 230, what it [00:01:00] is, and why it’s been important for people on the internet.

[00:01:04] We also discussed regulation: how regulators are having to balance new technologies, and the impact that judges, regulators, and the courtroom have had on technology in general. And now, for this week’s episode of The Brave Technologist.

Hi, welcome to The Brave Technologist podcast.

[00:01:25] Luke: How are you doing today?

Anupam: I’m doing great, thanks, Luke.

[00:01:29] Luke: Yeah, thanks for joining us. Why don’t we start off by giving the audience a little bit of background on how you ended up doing what you’re doing, and whether there’s anything in your background that shaped your direction and where you’ve landed now.

[00:01:41] Anupam: So I grew up in a small town, Oxford, Ohio, and one of the great virtues of living in Oxford, Ohio, was that there was a public university, which is what drew my family there in the first place. And the public university had to make some of its services and facilities available to the public. And so I got [00:02:00] access to minicomputers in the late 1970s, when I was in K through 6, because they had to make them available to the public. They didn’t like that, actually.

[00:02:11] I mean, the people running them didn’t like these kids running around their computer centers, but they had to offer us accounts. And so I learned computers, entirely self-taught; obviously, we didn’t have computers in school and things like that. So I learned how to write computer programs on an HP 3000 computer.

[00:02:31] We didn’t have to use earlier, more rudimentary systems, but it was still a kind of terminal system connected to what was then called a minicomputer. And so that’s what led me into this whole area. I spent a lot of time growing up programming. Then, you know, life took me different ways. I went off to law school, and eventually I became a law professor.

[00:02:50] Before that, I worked as a lawyer as well, doing international finance. And then when I became a law professor, I decided to return to my love [00:03:00] of technology. And so I’ve been teaching technology law since the year 2000.

[00:03:05] Luke: It is funny, too. Like, I’ve seen this kind of thing in my own career.

[00:03:07] Really talented people might be super technical and then drift into law, or vice versa, you know? It seems like there’s really something to the fundamentals of legal systems and computer systems, because you’ve got this human in between both of them, right?

[00:03:21] It seems really complementary. One side of the journey kind of informs the other, and they both evolve together. It’s super fascinating stuff. Why is what you’re doing now, from your point of view, really important to the world around you and to tech?

[00:03:37] Anupam: So, you know, I’ve been teaching this internet law, like I said, since the year 2000, and it was only after teaching it for a dozen years that I finally understood it.

[00:03:46] And so, let me try to explain. I finally understood the big question of why the internet didn’t make the whole world equal. The reality, of course, is that the internet allows anyone, anywhere, [00:04:00] technically, to offer their services to the world. And so the next Facebook might come from Kalamazoo, Michigan, or from Kenya.

[00:04:09] It shouldn’t matter, but the reality is it does. And the question is, why, why does it matter so much where you are, and in particular, why did the United States beat everyone else at the internet? For the last decade, I’ve been working on that question. Why did the United States produce all these incredible companies that have dominated the world?

[00:04:32] Large ones, and even on the smaller scale, just a huge plethora of companies, while the rest of the world has kind of lagged. China is, of course, its own special case, and we can discuss that if you’d like. And here, I decided that it was actually internet law that had made a big, big difference. I mean, there were a number of things going for the United States, such as a large single market.

[00:04:56] So that’s obviously very useful. You can amortize your [00:05:00] costs over a bigger population right from the get-go. You obviously had well-developed capital markets [00:05:06] generally in the United States for finance, whether venture capital or later-stage public companies, et cetera. But you also needed a new kind of law for dealing with this new thing, the internet.

[00:05:22] The U.S. actually created a set of laws that allowed the United States to become a hotbed of these kinds of new enterprises, for good and bad. That’s a descriptive story. You can take a normative story out of that if you’d like. But there’s a descriptive story of what happened, which is the first part of the enterprise.

[00:05:40] So I wrote and posted a paper in 2013 called “How Law Made Silicon Valley.” And that paper, for me, was really an epiphany about what I’d been teaching over the last decade. All of it fit together into what essentially created a business model for the internet, which may not have existed in [00:06:00] the absence of law.

[00:06:01] Luke: Yeah. It’s so interesting, too. I mean, even now, you’ve got generations that have never known life without the internet, and it’s become such a staple. But there are still areas, if you look at cryptocurrency, for example, or even AI, where a technology can be used for a financial purpose but can also be used as part of a global network.

[00:06:21] And so you’ve got different regulatory agencies that each have a hand in the pie as far as regulation and governance, and that’s just in the U.S. And these global networks are global, right? So a lot of this is: how do you keep that global access while having to navigate these regional or nation-state rules, especially in a powerful nation-state like the U.S., [00:06:44] where so much of the business of the internet is based, with so many users and that leading edge, right? But then you’ve got places like Europe, where privacy policies are influenced by the European governments. When I look at it, on one hand I think, thank you for making some kind of a definition that’s not just the [00:07:00] companies telling us. And from other angles, it’s like, man, some of these things are kind of a hassle, too, right? Yeah. There’s a really interesting angle that you bring to this, because it just seems like more and more of that human element gets brought into this, and where you’re standing in the world [00:07:13] does matter. I mean, a lot. And how can we democratize these things more broadly for some of those advantages while still keeping the thing what it is, right? Without having to say, oh, well, this is a Western network or whatever. I think there’s a lot of balance there. Let’s drill down a little bit, though, into something people are talking a lot about: digital sovereignty.

[00:07:35] Yeah. Yeah. Can you expand a bit on what digital sovereignty is and how it relates to AI? Because we’re seeing it thrown around quite a bit.

[00:07:43] Anupam: Sure. So digital sovereignty comes in a variety of flavors. The way I’ve described it is essentially: who controls the internet? For the various things that we do on the internet, who has the regulatory power over what [00:08:00] we do on the internet?

[00:08:01] Governments, of course, want control over the internet, and they should have control over the internet. So many people are allergic to the idea of digital sovereignty, but I’ve always thought it made sense. We can’t create a kind of lawless space. You know, as a lawyer, I believe in law, and so we can’t simply say, no, governments have no right here, you have no power over us, because we’re now, you know, in the ether. That never made sense from the start, because we know how badly it all ends up when people decide to forget about governments. Whether you can secede and form a better country, you know, et cetera, [00:08:42] those are important questions. Secession is typically a very difficult concept in international law; international law typically frowns on secession, et cetera. But digital sovereignty is, overall, the concept of who controls the internet and what we do via the internet.

[00:08:58] Luke: Maybe we can drill [00:09:00] into that a little bit, just so people can get a more explicit sense of what you mean when you say the government controls the internet, right?

[00:09:09] Are we talking about access, or are we talking about types of content? How do we frame that?

[00:09:15] Anupam: A classic case here is a case involving Yahoo and France. This is for the old-timers; young people won’t remember a website called Yahoo. Yahoo was kind of the first big search engine, a very popular search engine.

[00:09:28] It was a human-curated search engine. The other thing Yahoo did was offer various services. It was basically a portal, and it offered services such as auctions, or even web pages that you could design. And some people used those auctions to sell Nazi memorabilia, or Mein Kampf, or they used those web pages to post the full text of Mein Kampf.

[00:09:51] And in France, all that activity is illegal under their hate speech code. And [00:10:00] so two French leagues concerned about antisemitism brought suit against Yahoo in France. Yahoo said, hey, we’re an American company. You don’t have any sovereignty over us. If anyone has sovereignty, it’s the United States, and this is legal in the United States.

[00:10:18] We’re allowed to say whatever we want. And the French judge appointed a panel of experts. One of those experts was an American, and he was a real expert: someone we now recognize as a father of the internet, Vint Cerf. So French judges are pretty remarkable at finding very smart experts. And based on that, they said, hey, look, Yahoo, you can actually localize your information.

[00:10:45] You can find out whether we’re French or not with a pretty high rate of accuracy, 80 percent, something along those lines. If you can do that, then you should do that, and stop it when you identify that person as French. Stop sending this kind of material, serving this kind of material, to [00:11:00] France. We don’t care what you do in the United States.

[00:11:02] You guys can all rot in heck or whatever. We’re not claiming sovereignty over the United States version of Yahoo; we’re claiming sovereignty, digital sovereignty, over the internet when it intrudes into France. And frankly, all that made sense to me. I think that’s right. And it was also humble. I want to make sure that I’ve made this plain.

[00:11:25] The French court didn’t say you have to get rid of it in the United States. It said, we have no power over what goes on in the United States. Okay. We’re just saying, don’t send it into France.

[00:11:36] Luke: That’s fantastic. And that’s exactly what I was hoping you’d elaborate on, because people look at these things as very black or white: control, what does that mean?

[00:11:44] Right? And in this case, it sounds very much like, well, you’re catering based on where the person is and what that legal jurisdiction requires. That seems totally reasonable, especially if those services or whatever are catering to that market. There’s a practical side of [00:12:00] that.

[00:12:00] Certainly, I mean, you know, we’re not going to go from where we’re at now in the world to just having some one world government where there’s a monolithic framework for everything.

[00:12:10] Anupam: I want to give some credit to Yahoo. Jerry Yang was concerned. This was a stand on principle. He was litigating this on principle.

[00:12:18] It wasn’t because he was making money off of Nazi stuff. I don’t really believe that. Okay? Right. That’s the cynical view. You can take that view, et cetera. What he was saying was, look, we’re going to have to face this question around the world. If we get rid of this here, what’s China going to ask us to get rid of?

[00:12:33] What is Saudi Arabia going to ask us to get rid of, et cetera? We want to take a stand that we’re governed only by the United States, and that way we can have the freest internet possible. And so I appreciate that, but it doesn’t make sense. We haven’t given the United States the power to regulate the global information space, [00:12:52] even though we might want to, as Americans.

[00:12:55] Luke: Or some people think that we do, right? Like, in some cases.

[00:12:59] Anupam: Yeah, [00:13:00] but, you know, we shouldn’t assert that power. I appreciate what Yahoo was trying to do. It wasn’t, like, an evil thing that it was doing, in my opinion. During the course of the litigation, they said, Oh, we’ve actually changed our policy.

[00:13:12] Not because of the litigation; we’re just going to get rid of this stuff. It has nothing to do with the litigation, you know, just a coincidence. Of course, I don’t know anyone who actually believed that. So I don’t know what the purpose of saying that was. Yeah.

[00:13:30] Luke: A lot of times people focus on how policies will influence the technology, but how do you see AI influencing digital sovereignty policies or the work that you’re doing?

[00:13:42] Anupam: Well, I have to say, on the AI stuff, what I’d encourage everyone to do is take a breath and be humble about it. I think most of us don’t know where it’s headed, don’t really know what the future holds, don’t know what the right thing is. I’m trying to figure it out. [00:14:00] I think we should listen to each other.

[00:14:02] There’s a lot of assuming bad faith on everyone’s part. I assume that everyone involved here is largely making good-faith arguments about what they see. Yes, the arguments may turn out to be to their own benefit in any case, but they’re typically good-faith arguments nonetheless. I think there are a lot of important worldviews here.

[00:14:23] I, for example, don’t know what to think about the open-source AI debate. I don’t have a solid view on that. Liability is a very complicated question, so I don’t know if we have a clear vision of what liability will look like as AI moves forward. Copyright, privacy, all those things are complicated questions.

[00:14:47] Who knows what the impact is going to be on jobs, et cetera. So we’ve got lots of futures that are yet to come, unknown. And I think we want to have a conversation among people taking [00:15:00] each other seriously, assuming the best intent, having debates in this area, and then trying to work our way forward.

[00:15:08] Luke: Where do you see those conversations happening, from your point of view? Do you see this happening more in the public forum, or in the courtroom, or?

[00:15:16] Anupam: It’s happening in U.S. courtrooms. Okay, so people may think AI is not regulated, but one of the things I like to point out is that there are lots of lawsuits against AI [00:15:25] systems right now. What are those lawsuits based on? They’re based on law. The plaintiffs are bringing claims. I think many of the claims will be unsuccessful. But I think there are a lot of claims that are existential for AI. AI might produce some defamatory material, et cetera.

[00:15:42] It’s very easy for me to imagine that some AI will eventually say something untrue about someone. And so the question of how liability works in that context is painful. And then there are issues of privacy, et cetera. All kinds of existential questions, and, of course, copyright infringement as well.

[00:15:59] And [00:16:00] I think those conversations are occurring in American courtrooms and in courtrooms across the world. They’re certainly occurring in legislatures. We had them occurring at the European level, and we could talk about that a little bit. Sure. You know, with the AI Act. And then even at the UN level, there’s been a high-level task force that the Secretary-General set up.

[00:16:20] And it’s now starting to issue reports on global ways forward. One member of the global task force wrote a piece for Foreign Affairs saying it’s too early for global approaches to AI, as opposed to national ones. So I think these conversations are also happening in the halls of Congress, et cetera. I also think it’s important for academics to be involved and to be open to each other.

[00:16:43] In academia, luckily, we’re pretty respectful of each other, but maybe not enough of those conversations about the right way forward are occurring yet. I think that is something I should probably facilitate more myself as we move forward.

[00:16:59] Luke: So [00:17:00] much of this comes down to human relations, in all these different areas, that it’s not really going to be one conversation, right?

[00:17:05] It’s going to be a plethora of these things. Coming back to the academia point: you’ve been on the technology side, you’ve been on the legal side, and you’ve also been on the academic side, so you have a pretty balanced perspective. How well equipped are they to regulate? Personally, I’m involved a lot on the cryptocurrency and blockchain side of this, right, where it’s very new, very fast-moving, with a whole lot of different things.

[00:17:32] And now AI. But half the time, when I see a politician or an agency talking, I’m like, do you even know what this thing is? Then on the other end, I’ll see a court ruling or an opinion where it’s like, this person totally gets it. What’s your take on how well equipped the regulators are on the technology that they’re having to regulate?

[00:17:55] Anupam: I’m going to go to what you said offhandedly there, when you see an opinion and you’re like, oh, this person [00:18:00] totally gets it. So I just want to say that I’m a big fan of American courts, American trial courts, American appeals courts. I disagree with many decisions, et cetera, but there’s a lot of great judging going on out there, a lot of good-faith efforts.

[00:18:14] That doesn’t mean that I agree with them all the time, but generalist judges can do an amazing amount of work. These are people who could make many multiples of that in the private sector, and they are working really hard to try to do justice in the country, and I think we should always credit that.

[00:18:32] Now, legislatures, however: I do sometimes have my questions about the quality of our legislators, because it seems to be all politics all the time, and so it’s sometimes painful to watch our legislative process. One important issue in AI regulation is geopolitics: a lot of concern about China, a fear that if you [00:19:00] regulate harshly, you will liberate China’s companies to march ahead while suffocating American innovation.

[00:19:09] So that geopolitical angle, I think, is an important one. I don’t know what to say to it.

[00:19:15] Luke: Well, do you think it’s overblown when people take the position that if we regulate this too much, we’re going to fall behind? Even setting the geopolitical or adversarial side aside, right? Just from the standpoint of falling behind in competition, do you think these claims are overblown?

[00:19:31] I mean, obviously lobbyists have influence over the policy discussions, and some of this is just things you see elsewhere, too. Do you think there is a legitimate risk that if we regulate this too heavy-handedly, we are going to fall behind? Or do you think that’s mostly just political rambling?

[00:19:47] Anupam: So I do think there are some things that could be really problematic. For example, very strong copyright approaches here would, I think, be unnecessary and actually lead to [00:20:00] really awful results. Adobe has its own generative AI for generating images, and soon, I think, video. And Adobe has been licensing images forever, and it has always built this kind of right into those licenses.

[00:20:16] But not many companies have been building those kinds of licenses from the start. So what you’re doing is limiting the number of companies that can compete. Only the ones that can access these enormous caches of images will be able to compete. So I do think we’ve got some issues with making sure that the data is available for AI systems.

[00:20:40] In general, I think about most of these systems this way: as long as they’re not just entirely regurgitating the original, asking someone to paint in the style of someone else is not an intellectual property infringement, nor is asking them to write a novel in the style of [00:21:00] Dickens, or whatever it might be, if Dickens were in copyright, et cetera.

[00:21:03] So I think of it as learning from others, which is what we do all the time. The Beatles played covers, you know; they learned by playing covers, and then they became better. I do think there is a level at which you can make the law ruinous, but at the same time, you have to figure out what kind of AI you want to live with.

[00:21:26] That’s the important thing. I think right now we’re trying to figure that out. What do we want our future with AI to look like? I think there are a lot of really deep questions about humanity that have yet to be answered in this process. I do think that we’ve kept on moving the goalposts for our definition of AI.

[00:21:48] As AI gets ever smarter, we just move the goalposts back further before we recognize it as AI, as some kind of actual intelligent system. I think we’re going to [00:22:00] be struggling, as policymakers, et cetera, to understand the power of these systems. Sam Altman is already talking about GPT-5.

[00:22:11] It’s only been a year and a few months since the public release of 3.5, and he’s already talking about 5. The fact that these systems are going to get better and better and better is incredible. It’s also scary as a human being. My brain is what I believe is my humanity. My intelligence is part of my humanity.

[00:22:36] And now it can be done by these systems, in some ways better. And so it’s going to cause us to think about our place in the world very deeply. And I think we’re going to be turning back to our science fiction writers to try to understand how to live with these intelligent systems.

[00:22:55] Luke: With everybody so connected, do you feel optimistic that we’ll be able to work through this [00:23:00] all together as humans, globally, or even at the national level?

[00:23:03] Or do you feel like, I’m kind of scared about it, this is a really nerve-wracking kind of thing?

[00:23:09] Anupam: So I think to some extent we’ve seen machines that can do things better than humans before, right? Machines are stronger, more precise, they can replicate things better, et cetera. But we’ve still [00:23:22] kept the importance of humanity, and we’ve said things like, you know, we’ll note when things are human-made, when they’re made by hand, and I think that kind of connection with humanity will still remain important to many people. I am optimistic overall, because I think there are lots of problems we haven’t solved that AI will help us solve. These will be scientific problems, where science will be applied to make our world better.

[00:23:50] And so I’m, overall, very optimistic about this new stage in human evolution, the evolution of life. But I think the [00:24:00] future is being written. Our policy has to be open to embracing that future while trying to figure out the gravest risks we’re worried about. And so let’s target those risks in particular, and that is a little bit of what the EU’s AI Act is about.

[00:24:18] The red-teaming approach of the Biden administration, and the executive order’s push for these systems to do that, is, I think, quite useful as well. But it’s, of course, part of what the major companies were already doing. This stuff is being rolled out internationally, and one worries about [00:24:35] jurisdictions that don’t have the governance capacity becoming the guinea pigs for these systems early on. I think we have to watch for all of that, and, as a public, be aware of what’s going on, call out companies when that’s appropriate, and call out governments when that’s appropriate.

[00:24:55] Luke: Now, this is super interesting. I have to ask you about Section 230 while I’ve got you on [00:25:00] here. Can you summarize what Section 230 is for our audience? And what’s your take on Section 230, as somebody who’s been on all sides of this, as we covered earlier?

[00:25:10] Anupam: Section 230 was an early statute, part of the Telecommunications Act of 1996, the revision to the telecommunications law at that time, which basically said that internet companies and other users were not going to be held liable for carrying the speech of others, or for promoting or even editing the speech of others, as long as they didn’t develop that speech themselves.

[00:25:33] So as long as you didn’t develop that speech, even if you circulated it and even promoted it, you wouldn’t be held liable. That was an early decision, an architectural decision, about allowing these kinds of intermediaries to develop in cyberspace and removing all the liability that would arise, because, boy, when you give people the power to say something, some people are going to abuse that power. [00:26:00]

[00:26:00] That’s been true from the earliest days of the internet, from the bulletin board era onward. And so Section 230 said, we’re not going to hold the bulletin board system liable because some idiot said something idiotic. Many people today regret that and see it as a kind of failure, but I actually think it was a really critical intervention, and it’s allowed the flourishing of the varied speech that we have today.

[00:26:27] And think about Black Lives Matter. Okay, it fundamentally depends upon 230. BLM was a hashtag revolution. And what did it consist of? It consisted of claims that Sergeant So-and-so assaulted me, or assaulted so-and-so, excessively, without any proper basis. That is typically defamatory, right? That is a classic defamation case if it’s false. And when you’re the platform, you have no idea what happened with [00:27:00] Sergeant So-and-so in that case, and Sergeant So-and-so and the police department say, no, that’s utterly not what happened, this person was violent, or whatever, [00:27:09] it’s all justified. Those claims could be carried safely online by internet platforms without the risk that those platforms would be held liable for any errors that occurred. Somewhere along the line, someone’s going to say something false, even in that context. Okay. Sure. So there will be some actual defamation that occurs in this context, because some idiot’s going to say something that is just not proper.

[00:27:33] In that context, 230 is critical. We’ve had police brutality towards African Americans since the origin of the police in the United States. We finally recognized that as a society in the last decade because of the internet, and in this case in particular because of an internet law that allowed the internet to circulate this material without fear [00:28:00] that it would be ruinous for the companies that allowed that circulation.

[00:28:04] I’d say the same thing with respect to the Me Too movement. Sexual harassment has been with society since the beginning of time, and again we saw the same kind of discussion, with charges against individuals made possible because of this. Those are really critical things, and I think we should be careful. Both parties, you know, Biden and Trump, wanted to get rid of 230.

[00:28:31] And, you know, Biden still keeps on blaming 230 for lots of things. Certainly 230 is responsible for some ills. I’m not saying that everything it licenses is good. But, boy, the world where you impose liability is a world where platforms suppress a ton of speech.

[00:28:54] Luke: What a note to end on.

[00:28:55] I want to thank you, Anupam, for coming on and giving us this great conversation. Is there [00:29:00] anything we didn’t cover that you want to leave people with? And also, where can people find you online if they want to follow you and see what you’ve got to say?

[00:29:06] Anupam: I’m on Threads. I am on Bluesky, but I rarely actually post on Bluesky.

[00:29:12] I am on Twitter, unfortunately, or X as it’s called, just because I have the largest following on that platform, and so it’s hard for me to leave it. I’m on LinkedIn, so feel free; I’m trying to move to LinkedIn more, actually.

Luke: Excellent.

Anupam: And I have lots and lots of writing, so please just read that.

[00:29:30] Luke: Yeah, make sure you check it out. And thanks again, Anupam. I’d be glad to have you back sometime to do a follow-up. Thanks so much for joining.

[00:29:36] Anupam: Thanks, Luke. Okay, take care.

[00:29:39] Luke: Thanks for listening to The Brave Technologist podcast. To never miss an episode, make sure you hit follow in your podcast app.

[00:29:46] If you haven’t already made the switch to the Brave browser, you can download it for free today at brave.com and start using Brave Search, which enables you to search the web privately. Brave also shields you from the ads, trackers, and other creepy stuff following you across the [00:30:00] web.