zkSENSE: a privacy-preserving mechanism for bot detection in mobile devices

This research was conducted by Stan (Jiexin) Zhang, a research intern at Brave and a PhD student at the University of Cambridge, Dr. Panagiotis Papadopoulos, Security Researcher at Brave, and Dr. Ben Livshits, Chief Scientist at Brave. We gratefully acknowledge the valuable feedback of Prof. Alastair R. Beresford of the University of Cambridge.

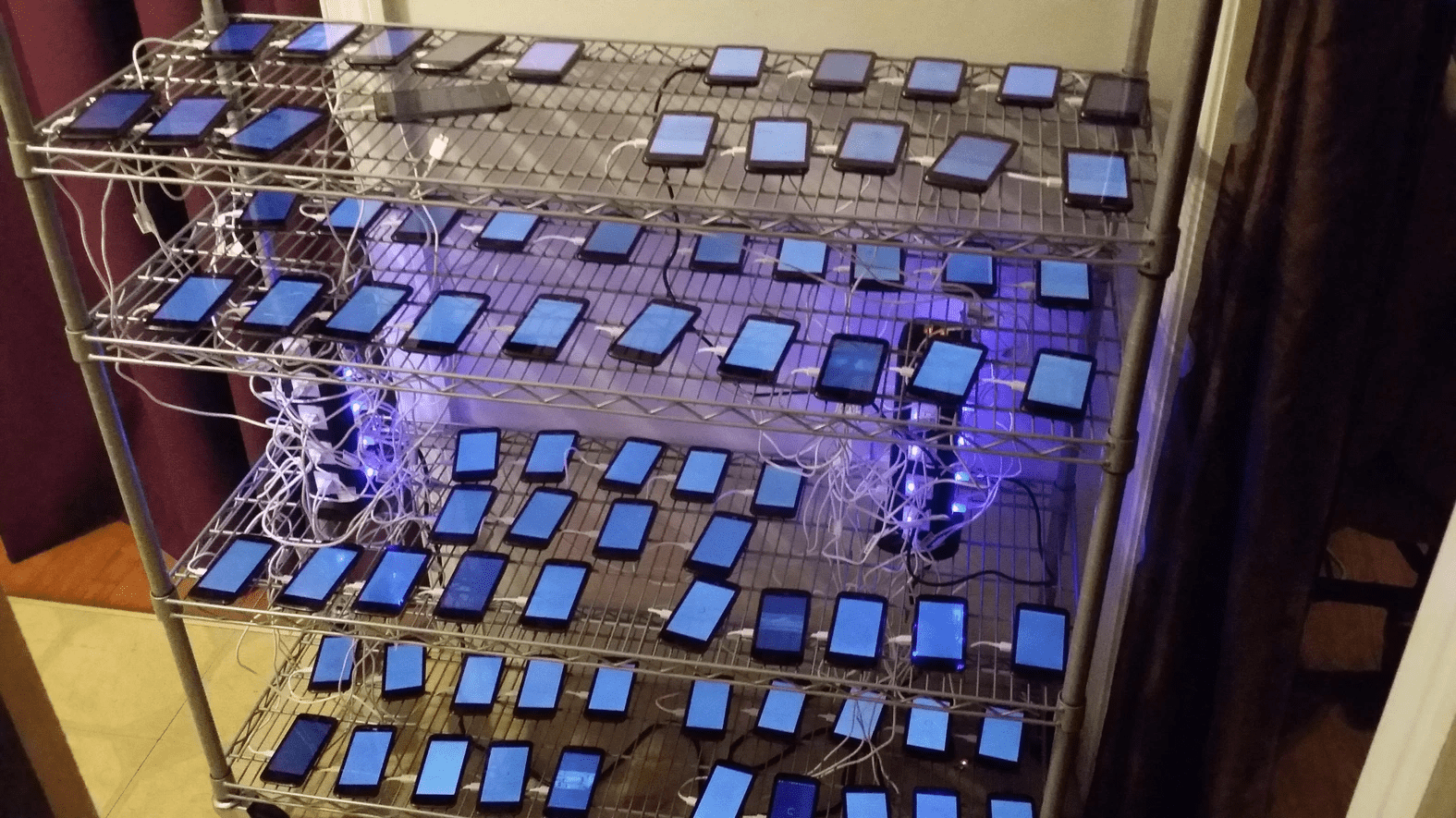

Bots are automated programs that often mimic human behavior for monetary or criminal purposes. They have become a serious and pervasive problem for many industries, especially the online advertising market. In 2013, it was discovered that the Chameleon botnet was harvesting around 6 million dollars per month from advertisers. The proliferation and wide variety of mobile devices have created opportunities for fraudsters to profit by abusing the ad ecosystem with low-cost mobile devices. In particular, recent news has reported that phone farmers use bots to automate phone clicks and touch movements to generate revenue from ad views.

CAPTCHA systems have been widely deployed to identify and block fraudulent bot traffic. However, current solutions either require additional user actions (e.g., the image or textual quizzes of Google’s reCAPTCHA v2), which significantly increase user friction, or monitor user behavior (e.g., invisible reCAPTCHA v3 [5]), which raises privacy concerns.

A humanness verification mechanism should satisfy the following key principles:

- Be as frictionless as possible by minimising the impact on user experience.

- Be privacy-preserving: avoid revealing any sensitive user information to remote auditors.

- Not treat the application’s code as trusted, and not rely on functionality running within a Trusted Execution Environment (TEE).

In this blog post, we present zkSENSE: a novel privacy-preserving mechanism for bot detection on smartphones. zkSENSE leverages device sensors, such as the gyroscope and accelerometer, to measure the device’s movement patterns while either a human or a bot is interacting with an app on the device. This way, zkSENSE can infer with high accuracy whether specific actions in an app (e.g., click or type events) were carried out by a human or a bot, even when there are artificial device movements (e.g., the device is placed on a swinging cradle). To preserve the privacy of the user, zkSENSE does not transfer potentially sensitive sensor data to a remote server. Instead, zkSENSE uses zero-knowledge proofs to demonstrate to a remote server that an action was carried out by a human without revealing any additional information.

We implemented a proof of concept of zkSENSE on Android and integrated the prototype into a toy app. The following video gives a sneak peek at our zkSENSE system:

The video shows zkSENSE running on a Pixel 3 when the phone is (1) resting on a platform, (2) held in one hand, and (3) tied to a swing-motion device. When bot touches are simulated, the blue dot on the screen visualises the touch position and duration (represented by the dot's radius). As the video shows, the system effectively distinguishes human clicks from software-simulated touches in all three cases.

zkSENSE: System Design

Threat Model

We assume an attacker whose goal is to perform automated bot operations (e.g., perform ad clicks, create posts/shares/likes in online social networks) for monetary gain. The aim of the defender is to accurately determine whether any particular action was carried out by a human or a bot. A powerful attacker of this kind can perform the following actions:

- Compromise the OS

- Modify the app code

- Run the app in a simulator

- Provide fake sensor outputs

- Understand and try to circumvent the defense mechanisms described in this blog post

As in previous work [6], we assume that the smartphone contains trusted hardware that collects and signs sensor data (see below for more details).

System Overview

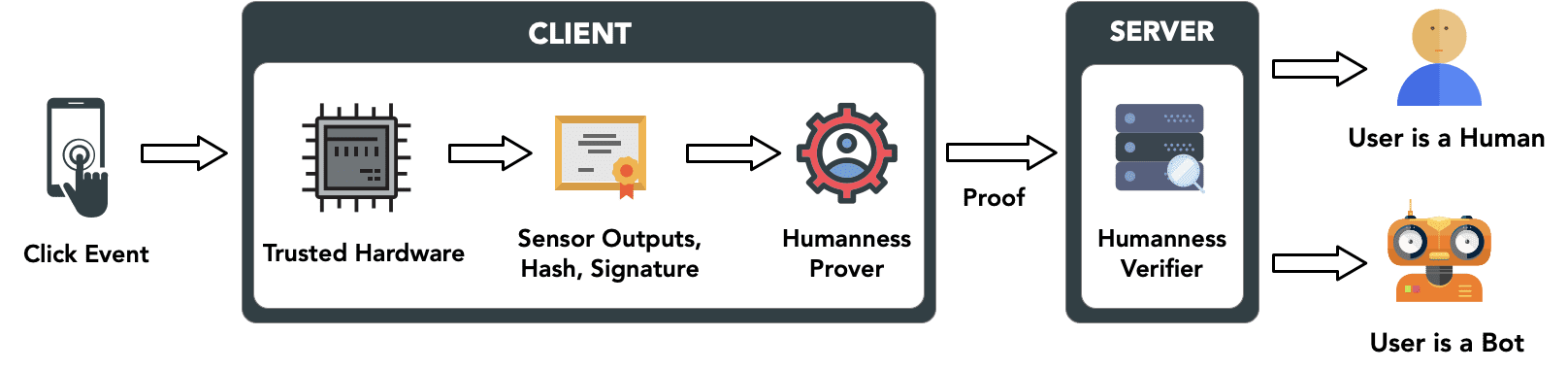

This section gives a high-level overview of our approach; Figure 1 shows the zkSENSE architecture. To keep the experience frictionless, we focus on an indispensable type of user-device interaction: touch events. Whenever a human touches the display, the force of the finger causes a small device movement, which is captured by the embedded Inertial Measurement Unit (IMU) sensors, such as the accelerometer and gyroscope. By contrast, fraudulent bots generally use software-simulated touches to fulfill their task with minimum investment. Since no finger exerts an external force on the device, there is likely no noticeable change in the IMU sensor outputs. zkSENSE uses a Machine Learning (ML) model to determine whether the pattern of sensor outputs before, during, and shortly after a touch event comes from a human or a bot.

Nevertheless, a clever attacker can attempt to cheat the system by replaying sensor outputs captured during legitimate human click events. Modern smartphones are typically equipped with trusted computing hardware: for example, the Secure Enclave is available on the iPhone 5s and newer iPhone models, and ARM TrustZone can be found in many Android handsets. To ensure data integrity, we rely on trusted hardware to hash the sensor outputs and generate a digital signature over the hash and the current timestamp. Since fraudulent bots cannot extract the private key from the trusted hardware, they cannot forge the signature, and the included timestamp allows the server to detect replayed data.
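The hash-sign-timestamp step and the server's replay check can be sketched as below. This is only an illustration under stated assumptions: to stay within the Python standard library, an HMAC-SHA256 tag stands in for the hardware-backed digital signature (a real deployment would use an asymmetric signature, so the server holds only the public key), and the names `sign_sensor_outputs`, `ReplayAwareVerifier`, and the 5-second freshness window are hypothetical. The check is shown in the clear here; in zkSENSE it is covered by the Data Integrity Prover and Verifier described below.

```python
import hashlib
import hmac
import struct
from typing import Sequence, Set, Tuple

Reading = Tuple[float, float, float]  # one (x, y, z) IMU reading


def digest_sensor_outputs(samples: Sequence[Reading]) -> bytes:
    """Hash the raw sensor outputs in a fixed binary encoding."""
    h = hashlib.sha256()
    for x, y, z in samples:
        h.update(struct.pack("<3d", x, y, z))
    return h.digest()


def _tag(key: bytes, digest: bytes, timestamp_ms: int) -> bytes:
    # Stand-in for the trusted hardware's signature over (hash, timestamp).
    return hmac.new(key, digest + struct.pack("<q", timestamp_ms), hashlib.sha256).digest()


def sign_sensor_outputs(key: bytes, samples: Sequence[Reading],
                        timestamp_ms: int) -> Tuple[bytes, int, bytes]:
    """Hypothetical 'trusted hardware' call: returns (hash, timestamp, tag)."""
    digest = digest_sensor_outputs(samples)
    return digest, timestamp_ms, _tag(key, digest, timestamp_ms)


class ReplayAwareVerifier:
    """Server-side check: valid tag, fresh timestamp, never seen before."""

    def __init__(self, key: bytes, max_age_ms: int = 5000) -> None:
        self.key = key
        self.max_age_ms = max_age_ms  # hypothetical freshness window
        self.seen: Set[bytes] = set()

    def accept(self, digest: bytes, timestamp_ms: int, tag: bytes, now_ms: int) -> bool:
        if not hmac.compare_digest(_tag(self.key, digest, timestamp_ms), tag):
            return False  # forged, or hash/timestamp tampered with
        if not 0 <= now_ms - timestamp_ms <= self.max_age_ms:
            return False  # stale: old data being replayed
        if tag in self.seen:
            return False  # exact replay inside the freshness window
        self.seen.add(tag)
        return True
```

Because the timestamp is covered by the tag, an attacker cannot refresh old sensor data without the key; the `seen` set only has to remember tags for the length of the freshness window.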

Privacy-Preserving Humanness Detection

Previous research [1-4] has shown that IMU sensor data can expose sensitive user information. We therefore avoid sending sensor data to remote servers and instead process it locally on the smartphone. As shown in Figure 1, the ML-based Humanness Prover module is deployed at the OS level on the user side, while the Humanness Verifier module on the server verifies the detection results.

In particular, zkSENSE starts collecting data from the accelerometer and the gyroscope when a user touches the screen, and stops a few hundred milliseconds after the touch ends. The collected data thus divides naturally into two segments: during touch and after touch. For each segment, we extract four features from the sensor outputs along each axis: minimum value, maximum value, average value, and standard deviation. In addition, we compute the consecutive differences of the sensor outputs along each axis, and use the average value and standard deviation of these differences as further features to train our machine learning model.
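The feature extraction above can be sketched as follows. This is a minimal sketch under assumptions not stated in the post: readings are `(timestamp_ms, x, y, z)` tuples, the after-touch window is a hypothetical 300 ms, and the consecutive differences are computed per segment. With two sensors, two segments, three axes, and six features per axis, each touch yields a 72-dimensional feature vector.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple

# One IMU reading: (timestamp in ms, x, y, z). Hypothetical layout.
Sample = Tuple[float, float, float, float]

# Hypothetical post-touch window; the post only says "a few hundred milliseconds".
AFTER_TOUCH_MS = 300.0


def split_segments(samples: Sequence[Sample], down_ms: float, up_ms: float,
                   after_ms: float = AFTER_TOUCH_MS) -> Tuple[List[Sample], List[Sample]]:
    """Split one sensor's readings into the during-touch and after-touch segments."""
    during = [s for s in samples if down_ms <= s[0] <= up_ms]
    after = [s for s in samples if up_ms < s[0] <= up_ms + after_ms]
    return during, after


def axis_features(values: Sequence[float]) -> List[float]:
    """Min, max, mean, std of one axis, plus mean and std of consecutive differences."""
    if len(values) < 2:
        raise ValueError("need at least two readings per segment")
    diffs = [b - a for a, b in zip(values, values[1:])]
    return [min(values), max(values), fmean(values), pstdev(values),
            fmean(diffs), pstdev(diffs)]


def segment_features(segment: Sequence[Sample]) -> List[float]:
    """Concatenate the six per-axis features for the x, y and z columns."""
    features: List[float] = []
    for axis in (1, 2, 3):
        features.extend(axis_features([s[axis] for s in segment]))
    return features


def touch_features(accel: Sequence[Sample], gyro: Sequence[Sample],
                   down_ms: float, up_ms: float) -> List[float]:
    """Feature vector for one touch: 2 sensors x 2 segments x 3 axes x 6 = 72 values."""
    features: List[float] = []
    for sensor in (accel, gyro):
        during, after = split_segments(sensor, down_ms, up_ms)
        features.extend(segment_features(during))
        features.extend(segment_features(after))
    return features
```

The consecutive-difference features capture how abruptly the readings change, which a finger tap tends to affect more than slow, smooth motion such as a swinging cradle.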

Verifiable evaluation of the ML model

Of course, attackers may be able to modify the app to alter the detection result or bypass the detection model entirely. The user-side Humanness Prover therefore needs to assure the remote verifier that the transmitted detection result was indeed generated by the expected ML model from genuine data. To provide such verifiable evaluation of the ML model and guarantee the integrity of the result without violating the privacy of the user, we use Zero-Knowledge Proofs (ZKPs).

A ZKP is a method by which one party (the prover) convinces another party (the verifier) that it knows a secret value, or that a statement about that value is true, without revealing the value itself. For example, a driving license with a smartcard may contain the driver's date of birth and use a ZKP to prove that the driver is over the age of 21 without revealing their actual age or birthday. In zkSENSE, we use a variant of ZKP, a zk-SNARK (zero-knowledge Succinct Non-interactive ARgument of Knowledge), that does not require a trusted setup.
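To make the idea of "proving without revealing" concrete, the sketch below implements the textbook Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. It is not the zk-SNARK used by zkSENSE, which proves statements about a whole ML model; it is the simplest standard example of a non-interactive ZKP. The group parameters are deliberately tiny toy values and offer no security.

```python
import hashlib
import secrets
from typing import Tuple

# Toy group: P = 2*Q + 1 is a safe prime and G = 4 generates the subgroup of
# prime order Q. These numbers are far too small to be secure.
P, Q, G = 2039, 1019, 4


def fiat_shamir_challenge(*values: int) -> int:
    """Derive the verifier's challenge by hashing the transcript (Fiat-Shamir)."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_knowledge(secret_x: int) -> Tuple[int, int, int]:
    """Prove knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)          # public statement
    r = secrets.randbelow(Q)         # fresh random nonce, never reused
    t = pow(G, r, P)                 # commitment
    c = fiat_shamir_challenge(G, y, t)
    s = (r + c * secret_x) % Q       # response; the nonce r masks x
    return y, t, s


def verify_knowledge(y: int, t: int, s: int) -> bool:
    """Accept iff G^s == t * y^c (mod P); the verifier never sees x."""
    c = fiat_shamir_challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The check works because G has order Q, so G^s = G^r * (G^x)^c = t * y^c, while the transcript (y, t, s) reveals nothing useful about x beyond the fact that the prover knows it.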

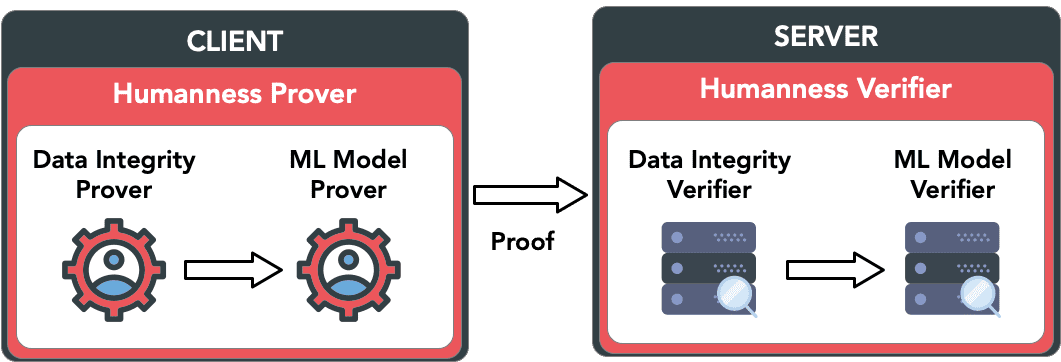

In zkSENSE, the Humanness Prover deployed on the client uses our ML model to check whether the user is a human and generates a ZKP for the Verifier. The Prover consists of two modules:

- Data Integrity Prover: This prover checks whether the data originates from the embedded IMU sensors. If so, the generated proof is passed on to the ML Model Prover.

- ML Model Prover: This prover checks whether the ML-based humanness detection model identifies the user as a human. The result and its proof are stored locally and sent to the server periodically.

The Humanness Verifier on the server contains two modules: a Data Integrity Verifier and an ML Model Verifier. If both verifications succeed, the server learns that the ML-based humanness detection model, evaluated on trusted sensor outputs, identified the user as a human, but it does not learn the values of those sensor outputs.
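The way the two server-side modules combine can be sketched as a simple conjunction. This is a hypothetical outline, not zkSENSE's interface: `HumannessClaim`, `verify_humanness`, and the two verifier callables are illustrative names, and the callables stand in for the actual zk-SNARK verification routines.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class HumannessClaim:
    """What the client sends: the model's verdict plus two proofs (hypothetical layout)."""
    is_human: bool
    integrity_proof: bytes
    model_proof: bytes


# Hypothetical verifier interfaces; in zkSENSE these would check zero-knowledge proofs.
IntegrityVerifier = Callable[[bytes], bool]
ModelVerifier = Callable[[bytes, bytes, bool], bool]


def verify_humanness(claim: HumannessClaim,
                     verify_integrity: IntegrityVerifier,
                     verify_model: ModelVerifier) -> bool:
    # Step 1: the data behind the claim came from the trusted IMU sensors.
    if not verify_integrity(claim.integrity_proof):
        return False
    # Step 2: the expected model, run on that same data, produced this verdict.
    if not verify_model(claim.model_proof, claim.integrity_proof, claim.is_human):
        return False
    # The server learns only the verdict; it never receives sensor values.
    return claim.is_human
```

Passing the integrity proof into the model check reflects the chaining described above: the ML Model Prover builds on the Data Integrity Prover's output, so a valid model proof over unverified data is not enough.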

The design of zkSENSE removes the need to trust the app code and the client OS. Malicious users can inspect the implementation details of the Humanness Prover and try to construct a valid proof to fool the server. However, without the private key of the trusted hardware, such attempts will be rejected by the Humanness Verifier.

Evaluation

Our dataset consists of 7,736 human clicks and 25,921 bot clicks from 6 Android device models: Google Pixel 3, Samsung Galaxy S9, Samsung Galaxy S8, Samsung Galaxy S6, OnePlus 6, and Huawei Mate 20 Lite. We collected this dataset by instrumenting a developer version of the Brave mobile browser app, which we shared with a controlled group of 6 colleagues for 3 weeks. We trained several classifiers using the features described earlier; Table 1 presents the results. Here, recall is the proportion of bot clicks that are correctly identified as bot clicks, i.e., the ability of the classifier to catch bots. In most zkSENSE use cases, recall matters more than precision, because human clicks misclassified as bots can be handled by falling back to a conventional CAPTCHA system.

| Classifier | F1 (weighted) | Recall (bot clicks) |
|---|---|---|
| SVM | 0.92 | 0.96 |
| Decision Tree (9 Layers) | 0.95 | 0.96 |
| Random Forest (8 Trees, 10 Layers) | 0.97 | 0.96 |
| KNN | 0.93 | 0.94 |
| Neural Network (Linear Kernel) | 0.88 | 0.95 |
| Neural Network (ReLU Kernel) | 0.92 | 0.96 |

Table 1: Weighted F1 score and recall of the tested classifiers
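The two metrics in Table 1 can be computed from true and predicted labels as sketched below, treating "bot" as the positive class for recall. The function names are illustrative; the weighted F1 averages the per-class F1 scores, weighting each class by its number of true instances, which is how the weighted average is commonly defined (e.g., in scikit-learn).

```python
from typing import Sequence, Tuple


def precision_recall_f1(y_true: Sequence[str], y_pred: Sequence[str],
                        positive: str) -> Tuple[float, float, float]:
    """Precision, recall and F1 for one class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall for "bot": the share of real bot clicks that were caught.
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def weighted_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Per-class F1 averaged with weights equal to each class's share of y_true."""
    n = len(y_true)
    return sum(
        y_true.count(label) / n * precision_recall_f1(y_true, y_pred, label)[2]
        for label in sorted(set(y_true))
    )
```

A classifier that flags too many human clicks lowers precision for "bot" but not recall, which is the trade-off the fallback CAPTCHA is meant to absorb.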

As shown in Table 1, four classifiers (SVM, decision tree, random forest, and neural network with ReLU kernel) achieve the same recall. We choose SVM as the underlying model of zkSENSE because it is simple to verify. In the zk-SNARK used by zkSENSE, proving arithmetic operations such as multiplication and addition is cheap, whereas range proofs (e.g., proving that a value is greater than zero) are relatively expensive. An SVM suits this well, since classifying a touch requires only a single range proof.
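Why an SVM needs only one range proof can be seen from its decision function, sketched here under assumptions the post does not state: a linear kernel, features and weights quantized to fixed-point integers (proof circuits operate on integers rather than floats) with a hypothetical scale of 1000, and the hypothetical convention that a positive score means "bot". Everything except the final comparison is multiplication and addition.

```python
from typing import Sequence

SCALE = 1000  # hypothetical fixed-point scaling factor


def to_fixed(value: float) -> int:
    """Quantize a real number to a fixed-point integer."""
    return round(value * SCALE)


def decision_value(weights: Sequence[float], features: Sequence[float],
                   bias: float) -> int:
    """w . x + b in fixed point: only multiplications and additions."""
    # Products carry a factor SCALE**2, so the bias is scaled to match.
    acc = to_fixed(bias) * SCALE
    for w, x in zip(weights, features):
        acc += to_fixed(w) * to_fixed(x)
    return acc


def classify_as_bot(weights: Sequence[float], features: Sequence[float],
                    bias: float) -> bool:
    # The single comparison: the only step that would need a range proof.
    return decision_value(weights, features, bias) > 0
```

By contrast, a decision tree or random forest evaluates a comparison at every node it visits, and each comparison would require its own range proof.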

Conclusion

To prevent bots from abusing online services, we need a reliable way to determine whether a given action was performed by an actual human. Current solutions, such as Google reCAPTCHA, either (i) require additional user actions (e.g., solving mathematical or image quizzes) or (ii) send attestation data back to the server, raising significant privacy concerns. Here we propose zkSENSE, a novel bot detection scheme based on zero-knowledge proofs that is both frictionless and privacy-preserving. zkSENSE leverages sensor outputs during common user-device interactions to identify bots and uses ZKPs to build trust while preserving user privacy. We believe zkSENSE is a better alternative to current privacy-invasive bot detection systems for mobile devices: it does not require any additional user operations, and it works not only for mobile websites but also for native apps. We are continuously evaluating and improving zkSENSE.

References

[1] Mehrnezhad, Maryam, Ehsan Toreini, Siamak F. Shahandashti, and Feng Hao. “Touchsignatures: identification of user touch actions and pins based on mobile sensor data via javascript.” Journal of Information Security and Applications 26 (2016): 23-38.

[2] Malekzadeh, Mohammad, Richard G. Clegg, Andrea Cavallaro, and Hamed Haddadi. “Protecting sensory data against sensitive inferences.” In Proceedings of the 1st Workshop on Privacy by Design in Distributed Systems, p. 2. ACM, 2018.

[3] San-Segundo, Rubén, Henrik Blunck, José Moreno-Pimentel, Allan Stisen, and Manuel Gil-Martín. “Robust Human Activity Recognition using smartwatches and smartphones.” Engineering Applications of Artificial Intelligence 72 (2018): 190-202.

[4] Zhang, Jiexin, Alastair R. Beresford, and Ian Sheret. “SensorID: Sensor Calibration Fingerprinting for Smartphones.” In 2019 IEEE Symposium on Security and Privacy (SP), pp. 638–655. IEEE, 2019.

[5] Google. “Invisible reCAPTCHA.” https://developers.google.com/recaptcha/docs/invisible, 2019.

[6] Guerar, Meriem, Alessio Merlo, Mauro Migliardi, and Francesco Palmieri. “Invisible CAPPCHA: A usable mechanism to distinguish between malware and humans on the mobile IoT.” Computers & Security 78 (2018): 255–266.

* Figures 1 and 2 use resources from svgrepo.com under the Creative Commons BY 4.0 license.