Nebula: Brave’s differentially private system for privacy-preserving analytics

This post describes work by Ali Shahin Shamsabadi, Peter Snyder, Ralph Giles, Aurélien Bellet, and Hamed Haddadi. This post was written by Brave’s Privacy Researcher Ali Shahin Shamsabadi.

We are excited to introduce Nebula, a novel and best-in-class system developed by Brave Research for product usage analytics with differential privacy guarantees. Nebula combines several cutting-edge privacy-enhancing technologies (including verifiable user-side thresholding and sample-and-threshold differential privacy) to gain useful insights into the product usage and feedback of a population (i.e., many Brave users) without learning anything about the choices of individuals in that population (i.e., each individual Brave user).

Nebula benefits users: it guarantees user privacy without requiring prohibitive trust assumptions. Nebula also enables Brave to achieve better utility and lower server-facing overhead compared to existing solutions based on the local and shuffling models of differential privacy.

Brave Research shares the implementation so that other projects can use it.

Product analytics

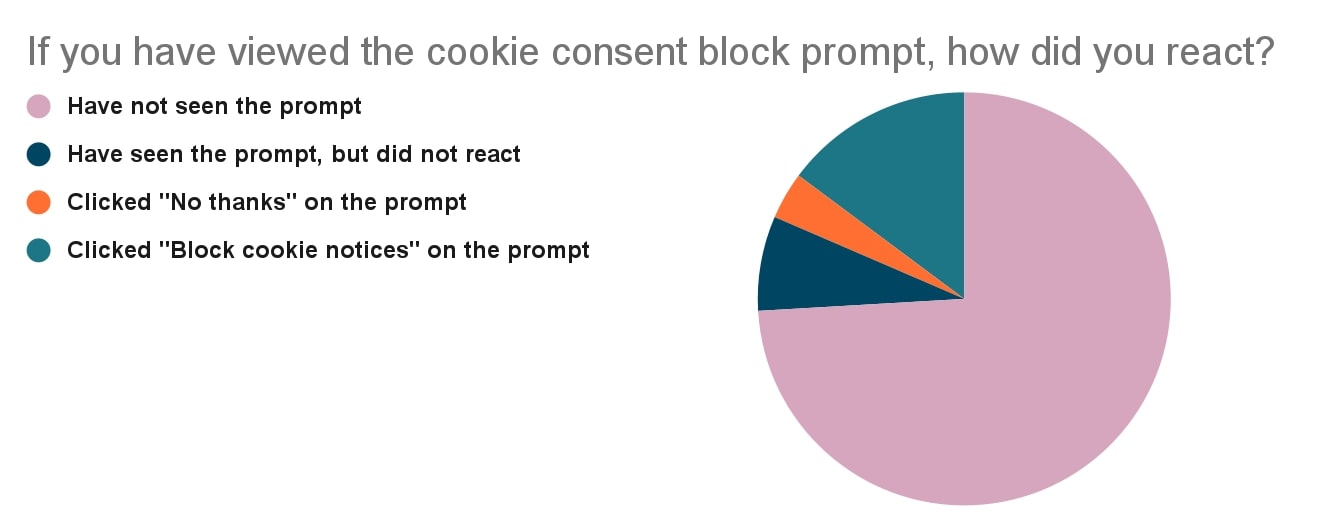

Developers need to learn how Brave features are being used by many users in order to improve the user experience on the Web. For example, to learn how well Brave’s privacy-preserving cookie banner removal works, Brave developers ask the question “If you have viewed the cookie consent block prompt, how did you react?” However, each individual user’s choice needs to remain private. As shown in the chart, Nebula allows Brave to determine how much and where resources need to be allocated to block privacy-harming cookies for users, and to understand the popularity order of reactions, without learning the reaction of, or the cookie banners seen by, any individual user. Nebula’s differential privacy guarantees ensure that the same conclusions (for example, that most users react to cookie banners by blocking cookie notices) can be drawn regardless of whether any individual user opts into or out of contributing their answer to the above question.

Nebula enables developers to learn how Brave features are being used and to improve user experience on the Web, without risking users’ privacy.
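For readers who want the formal statement behind this guarantee, the standard textbook definition of differential privacy is given here. It is not a formula taken from this post, and the symbols $M$, $D$, $D'$, $S$, $\varepsilon$ and $\delta$ are introduced only for illustration. A randomized mechanism $M$ is $(\varepsilon, \delta)$-differentially private if, for any two datasets $D$ and $D'$ that differ in one user’s contribution, and for any set of possible outputs $S$:

$$
\Pr[M(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[M(D') \in S] + \delta
$$

Smaller values of $\varepsilon$ and $\delta$ mean the distribution of results barely changes whether or not a given user takes part. The post does not state the values Nebula uses, so they are left symbolic.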

Nebula puts Brave users first in product analytics

- Nebula guarantees users’ privacy. Brave cares about safeguarding the privacy of our users. Nebula provides formal differential privacy guarantees, ensuring that the product analytics leak as little as possible about each user’s choice. Brave does not even learn whether a given user is present or absent, let alone learn their data. Differential privacy is a robust, meaningful, and mathematically rigorous definition of privacy.

- Nebula enables better auditability, verifiability, and transparency. Using Nebula means that users do not have to blindly trust Brave. Users are always in control of whether they contribute any data, and they know which data they are contributing and when. We open-sourced Nebula as part of Brave’s STAR. In previous blog posts, we’ve talked about how we originally designed our Privacy-Preserving Analytics system and then improved it with Brave’s STAR. Now we’ve developed a further improvement using differential privacy, giving our users unmatched privacy protection.

- Nebula is efficient with very little user-facing cost. Local computation on the user side requires very little effort.

Nebula’s inherently efficient design ensures that companies of all sizes can affordably protect their users’ privacy in their product analytics while also minimising negative environmental impacts.

Nebula design

At a high level, Nebula works as follows:

- Local and verifiable user-side sampling. Each user decides to submit their data with a small probability; otherwise, they abstain from submitting their real data.

- Local user data encryption. Users who do decide to participate (based on the outcome of their coin flip) locally encrypt their value using Brave’s STAR secret sharing scheme. This secret sharing process has negligible compute and memory overhead for users.

- Dummy data. A small number of users submit additional dummy data to obscure the distribution of uncommon (i.e., unrevealed) values.

- Data aggregation. Brave recovers values and their associated counts by inverting the secret sharing, which ensures that Brave cannot learn a user’s value unless sufficiently many users sent the exact same value.
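The threshold property in the aggregation step can be illustrated with a textbook Shamir-style secret sharing sketch in Python. This is a simplified illustration and not Brave’s STAR implementation: the function names, the field modulus, and the idea of deriving the polynomial by hashing the value directly are all assumptions made for this example. In particular, hashing the value directly would let anyone test guesses for low-entropy values, and the sketch omits encrypting the value under the recovered key.

```python
import hashlib
import secrets

PRIME = 2**127 - 1  # a Mersenne prime used as the field modulus (illustrative choice)


def _coefficients(value: str, threshold: int) -> list[int]:
    """Derive the polynomial deterministically from the value, so that all
    users holding the same value produce points on the same polynomial."""
    return [
        int.from_bytes(hashlib.sha256(f"{i}:{value}".encode()).digest(), "big") % PRIME
        for i in range(threshold)
    ]


def value_key(value: str, threshold: int) -> int:
    """The secret being shared: the polynomial's constant term."""
    return _coefficients(value, threshold)[0]


def make_share(value: str, threshold: int) -> tuple[int, int]:
    """User side: evaluate the polynomial at a random nonzero point."""
    x = secrets.randbelow(PRIME - 1) + 1
    y = 0
    for coeff in reversed(_coefficients(value, threshold)):  # Horner's rule
        y = (y * x + coeff) % PRIME
    return x, y


def recover_key(shares: list[tuple[int, int]]) -> int:
    """Server side: Lagrange interpolation of the polynomial at x = 0."""
    key = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        key = (key + yi * num * pow(den, -1, PRIME)) % PRIME
    return key


shares = [make_share("blocked", threshold=3) for _ in range(3)]
# With three shares of the same value, the server reconstructs the key.
print(recover_key(shares) == value_key("blocked", 3))
# With only two shares, interpolation yields an unrelated number.
print(recover_key(shares[:2]) == value_key("blocked", 3))
```

The design point this illustrates is that the server’s ability to read a value depends only on how many users independently submitted that same value, not on any decision the server makes.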

Nebula satisfies differential privacy

Nebula enforces formal differential privacy protection for users through three mechanisms: 1) uncertainty about whether any particular user contributes a value; 2) blinding Brave to uncommon values through the secret-sharing mechanism (i.e., thresholding); 3) having some users contribute precisely defined amounts of dummy data to obscure the distribution of uncommon values.
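How these three ingredients interact can be seen in a small Python simulation. It is a minimal sketch under stated assumptions, not Nebula’s implementation: the function name `nebula_like_round`, every parameter value, and the choice to draw dummy values uniformly from a fixed domain are hypothetical, and the cryptographic thresholding is replaced by a plain count check.

```python
import random
from collections import Counter


def nebula_like_round(true_values, sample_prob, threshold, dummy_prob, domain, rng):
    """Simulate one collection round and return the counts the server can see."""
    submissions = []
    for value in true_values:
        # 1) Sampling: each user submits their real value only with small probability.
        if rng.random() < sample_prob:
            submissions.append(value)
        # 3) Dummy data: a few users also submit one dummy value.
        #    Drawing it uniformly from `domain` is an assumption of this sketch.
        if rng.random() < dummy_prob:
            submissions.append(rng.choice(domain))
    counts = Counter(submissions)
    # 2) Thresholding: values submitted fewer than `threshold` times stay hidden.
    return {value: n for value, n in counts.items() if n >= threshold}


rng = random.Random(2024)
# Made-up population: most users block, some allow, a handful do something rare.
population = ["blocked"] * 9000 + ["allowed"] * 990 + ["other"] * 10
domain = ["blocked", "allowed", "other"]
revealed = nebula_like_round(population, sample_prob=0.05, threshold=20,
                             dummy_prob=0.001, domain=domain, rng=rng)
for value, n in sorted(revealed.items(), key=lambda kv: -kv[1]):
    print(value, n)
```

With these made-up parameters, common reactions are very likely to clear the threshold, while a reaction held by only a handful of users is very likely to stay hidden. The exact counts depend on the random seed.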

Acknowledgements

We would like to thank Shivan Kaul Sahib, Darnell Andries, and François Marier for their feedback and for their help with infrastructure implementation and production maintenance.