MELTing Point: Mobile Evaluation of Language Transformers

This post describes work done by Stefanos Laskaridis, Kleomenis Katevas, Lorenzo Minto and Hamed Haddadi. This post was written by Machine Learning Researcher Stefanos Laskaridis.

TL;DR: As we enter the era of hyper-scale models, it is essential to retain the ability to host AI locally, for the sake of privacy and sustainability. This is the first study that measures the deployability of Large Language Models (LLMs) at the consumer edge, exploring the potential of running differently sized models on smartphones and edge devices 1 instead of the cloud.

Introduction

Large Language Models, such as Llama-3, Mixtral, or ChatGPT, have recently revolutionized the machine learning landscape, enabling use cases that were previously unfathomable, such as intelligent assistants (including our own Brave Leo), creative writing, and agent-based automations (Brave Search Integration in Leo, for instance). At the same time, the devices in our pockets have become increasingly capable 2, integrating ever more powerful Systems-on-Chip (SoCs). Based on this trend, and in keeping with our commitment to preserving users’ privacy, we explore the feasibility of deploying LLMs on device, a model in which user prompts and LLM outputs never leave the device.

To do this, the Brave Research team has built its own LLM benchmarking infrastructure, named BLaDE, for measuring the latency, accuracy, memory, and energy impact of running LLMs on-device. Acknowledging that these models are often too large, we also leverage edge devices, which can be co-located with smart devices at the consumer side 3, to accelerate execution locally. This can also be deployed with our most recent BYOM (Bring Your Own Model) self-hosting option.

Our experience has shown that while the GenAI ecosystem is growing rapidly, local on-device deployment is still in its infancy and remains very heterogeneous across devices. Deploying LLMs on device is possible, but with a noticeable impact on latency, comfort and accuracy, especially on mid-tier devices. However, hardware and algorithmic breakthroughs can significantly change the cost of execution and the user's Quality of Experience (QoE). At the same time, SLMs (Small Language Models) 4, tailored for specific downstream tasks, are gradually making their appearance.

Brave Research Device Lab

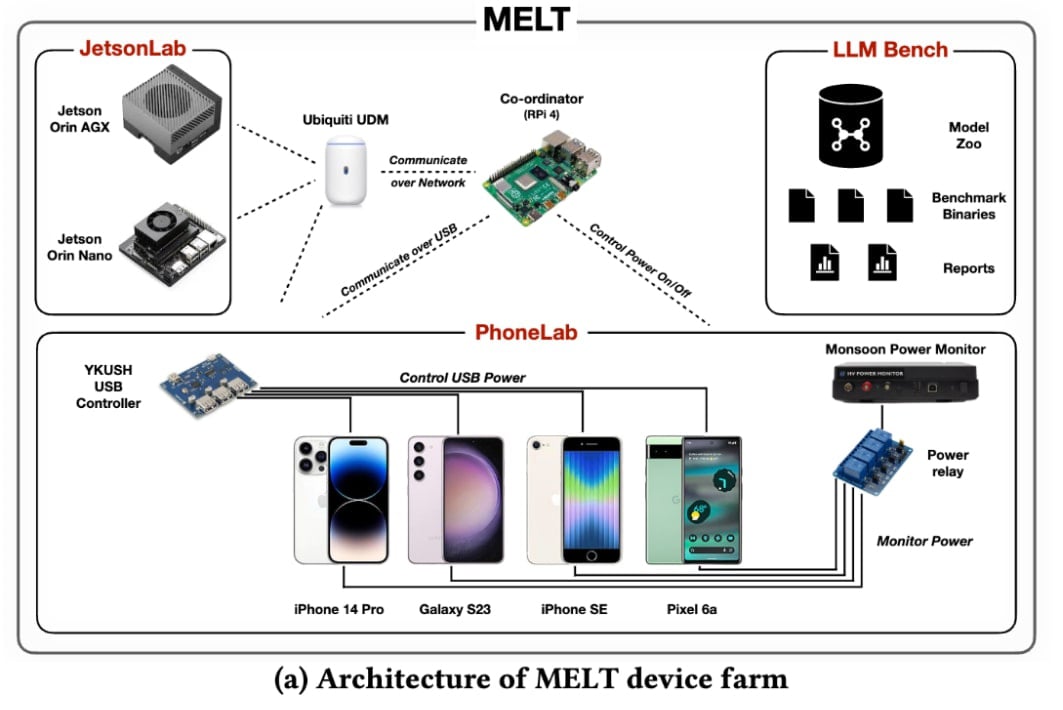

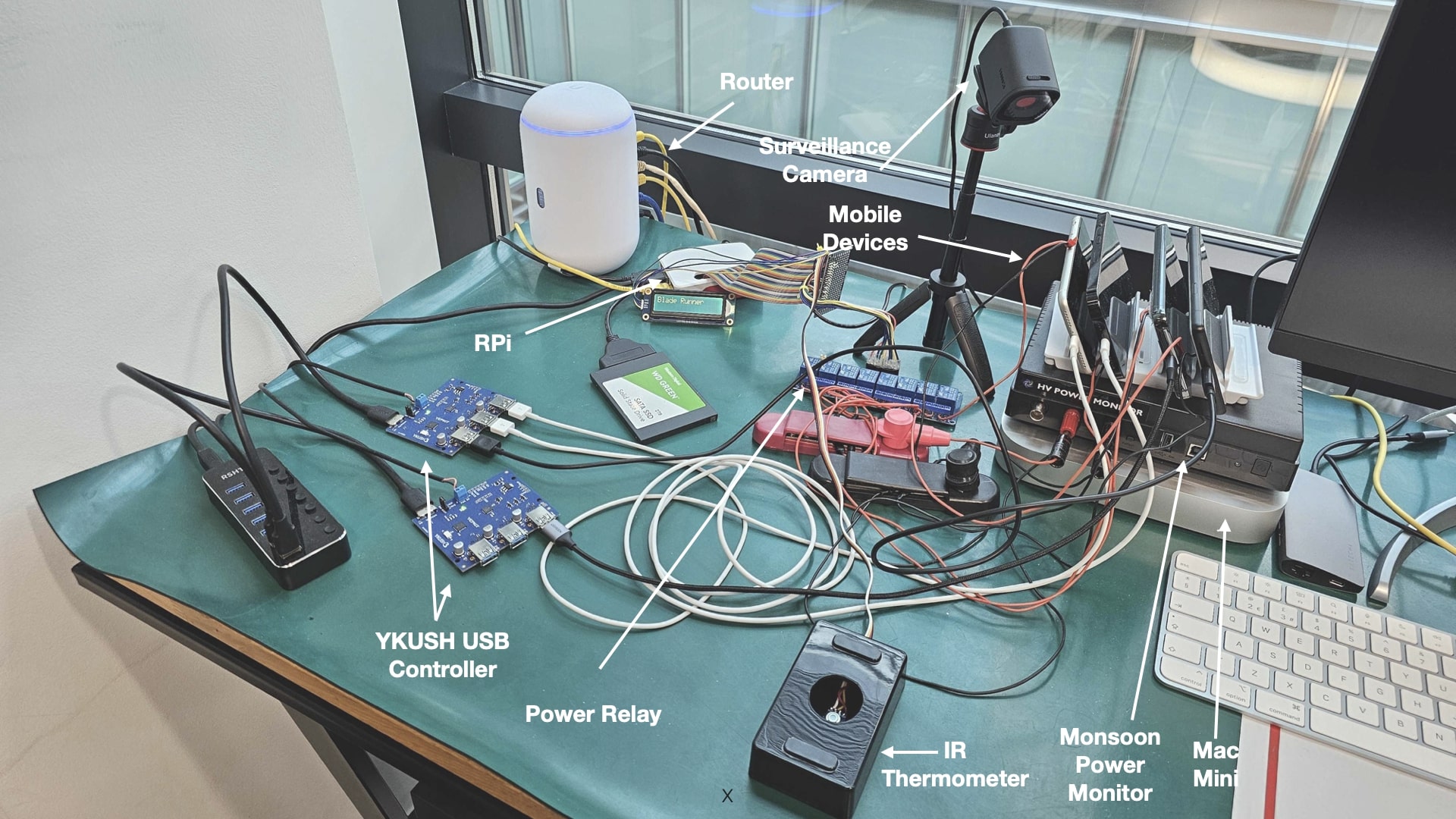

BLaDE (BatteryLab Device Evaluation) is a state-of-the-art benchmarking infrastructure that is capable of automating the interaction with mobile devices for performance and energy measurements. It can be used for neural or more generic browser tasks. MELT is the component responsible for the benchmarking of neural workloads on various devices.

MELT adopts a server-client architecture, with the central coordinating process being responsible for the following:

- Organizing the execution of the benchmarking suite;
- Scheduling and dispatching jobs to connected devices;
- Controlling the downstream interaction with the application;
- Monitoring their runtime, temperature and energy consumption;
- Tracing the events of interest in the downstream task and capturing the associated device behavior.

To this end, it integrates the following components:

-

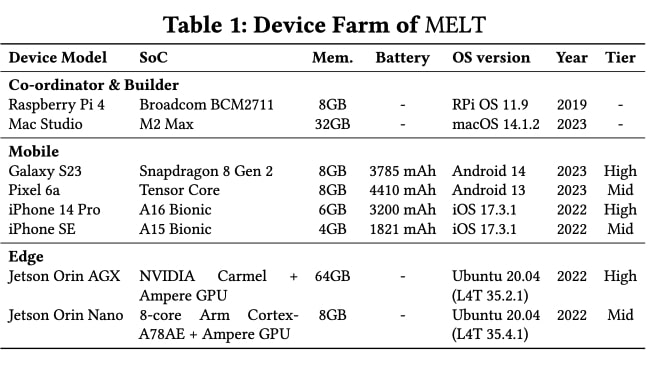

a Raspberry Pi 4 8GB, which adopts the role of the coordinator;

-

a Mac Mini, for building packages;

-

a Monsoon power monitor connected over a Raspberry Pi GPIO addressable relay to control power to individual mobile devices;

-

a programmable YKUSH USB Switchable Hub for communicating and selectively disabling USB power to devices;

-

a Flir One edge wireless camera along with an custom-built IR thermometer (based on MLX90614) for monitoring temperature of the device; and last,

-

a set of mobile devices, shown on Table 1, which have undergone a battery bypass procedure.

In parallel, the coordinator is connected over Ethernet to the same network as our Nvidia Jetson boards, to which it has SSH access. The Jetsons provide power and temperature metrics through the sysfs probes they expose.
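As a rough illustration of what a sysfs temperature probe looks like, the sketch below reads the generic Linux thermal zones under `/sys/class/thermal`, which report values in millidegrees Celsius. Whether MELT reads these exact files is an assumption; the function names are hypothetical, and board-specific power rails are exposed under other paths that are not covered here.

```python
from pathlib import Path


def read_millidegrees(path) -> float:
    """Read a sysfs value given in millidegrees Celsius; return degrees Celsius."""
    raw = Path(path).read_text().strip()
    return int(raw) / 1000.0


def read_thermal_zones(root="/sys/class/thermal") -> dict:
    """Return {zone type: temperature in Celsius} for every readable thermal zone.

    `root` defaults to the generic Linux thermal sysfs directory; the zones
    present (and their names) depend on the board and kernel.
    """
    readings = {}
    for zone in sorted(Path(root).glob("thermal_zone*")):
        temp_file = zone / "temp"
        type_file = zone / "type"
        try:
            # Fall back to the directory name when the zone has no type file.
            name = type_file.read_text().strip() if type_file.is_file() else zone.name
            readings[name] = read_millidegrees(temp_file)
        except (OSError, ValueError):
            # Some zones are unreadable or report non-numeric values; skip them.
            continue
    return readings


if __name__ == "__main__":
    for name, celsius in read_thermal_zones().items():
        print(f"{name}: {celsius:.1f} C")
```

On a remote board, the same files could be read over the SSH connection mentioned above; polling them at a fixed interval during inference yields a temperature trace.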

MELT Workflow

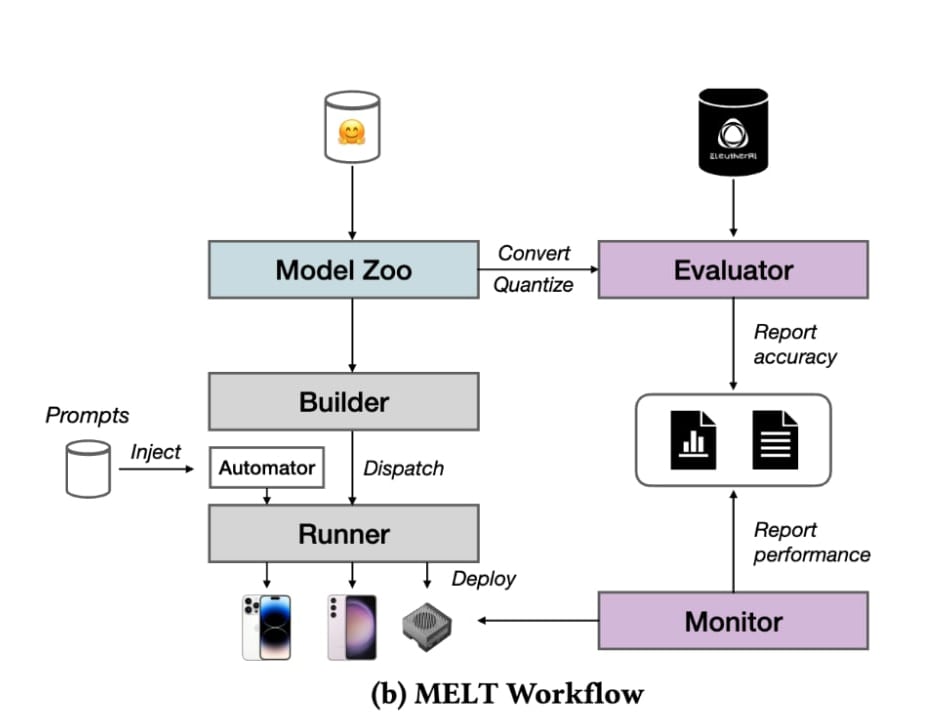

The measurement workflow we follow is depicted in Figure 1b. MELT’s infrastructure consists of the following components:

-

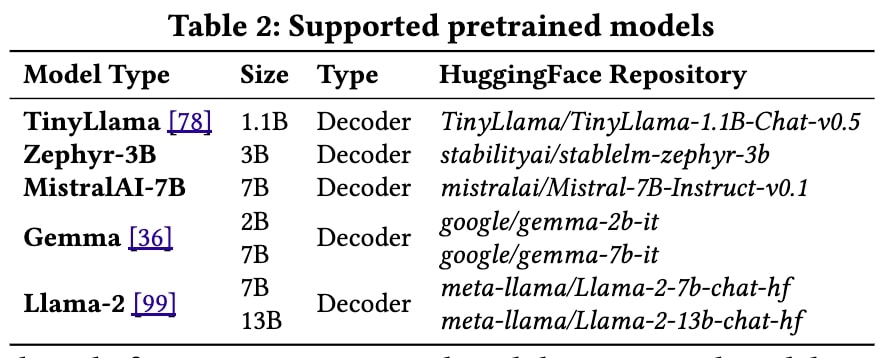

Model Zoo, responsible for the download and compilation/quantization of models. We used the models of Table 2.

-

Evaluator, responsible for the evaluation of the accuracy degradation of models due to their conversion/quantization. We used the datasets of Table 4.

-

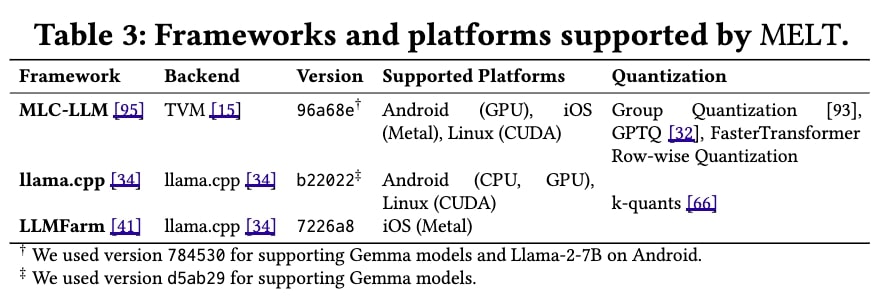

Builder, responsible for the compilation of the respective benchmarking suite backend, shown in Table 3, namely llama.cpp and MLC-LLM.

-

Runner, responsible for the deployment, automation and runtime of the LLM on the respective device. Integrated devices are shown on Table 1.

-

Monitor, responsible for the fine-grained monitoring of resource and energy consumption of the execution

Result Highlights

Below, we provide the most interesting results of our analysis and their consequences for on-device deployment and future product research.

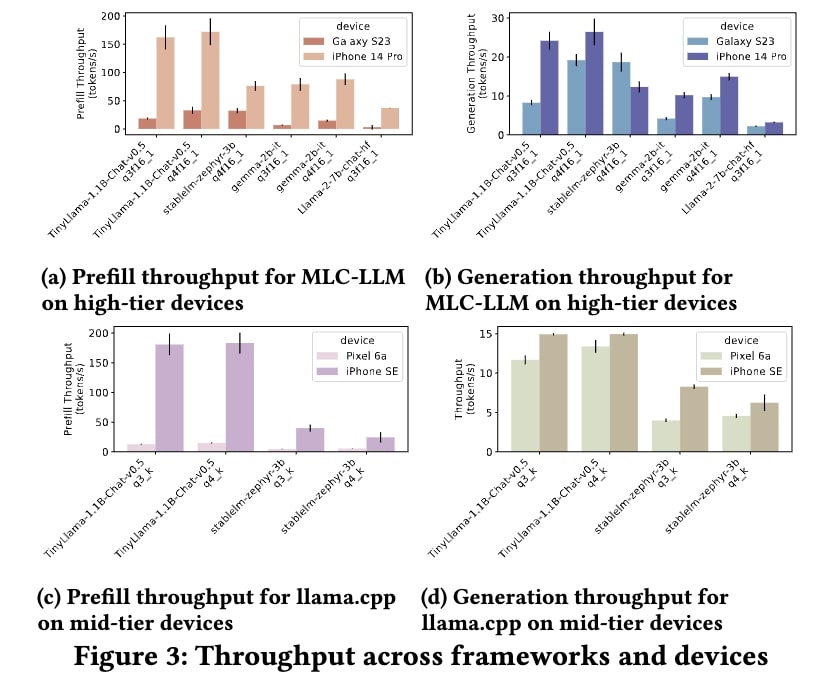

Throughput and Energy per Device

Figure 3 illustrates the prefill and generation throughput of various models on different devices when used in a conversational setting. Prefill refers to the preparation and processing of input tokens before actual generation begins (e.g., tokenization, embedding, KV cache population), while generation refers to the autoregressive generation (i.e., decoding) of output tokens. Throughput expresses the rate of token ingestion/production, measured in tokens/sec.
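The two throughput figures can be derived from three timestamps per prompt. The following is a minimal sketch, assuming a hypothetical `InferenceTrace` record with the token counts and timestamps shown; it is not MELT's actual data format, and the numbers are illustrative rather than measured.

```python
from dataclasses import dataclass


@dataclass
class InferenceTrace:
    """Token counts and timestamps (seconds) for one prompt; hypothetical structure."""
    prompt_tokens: int      # tokens ingested during prefill
    generated_tokens: int   # tokens produced during decoding
    t_start: float          # prompt submitted
    t_first_token: float    # prefill finished, first output token emitted
    t_end: float            # last output token emitted


def prefill_throughput(trace: InferenceTrace) -> float:
    """Tokens ingested per second during prefill."""
    return trace.prompt_tokens / (trace.t_first_token - trace.t_start)


def generation_throughput(trace: InferenceTrace) -> float:
    """Tokens produced per second during autoregressive decoding."""
    return trace.generated_tokens / (trace.t_end - trace.t_first_token)


# Illustrative numbers only, not measurements from the study.
trace = InferenceTrace(prompt_tokens=128, generated_tokens=64,
                       t_start=0.0, t_first_token=2.0, t_end=10.0)
print(f"prefill:    {prefill_throughput(trace):.1f} tokens/sec")
print(f"generation: {generation_throughput(trace):.1f} tokens/sec")
```

Keeping the two phases separate matters because they stress different resources, so a single end-to-end tokens/sec figure would hide which phase dominates on a given device.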

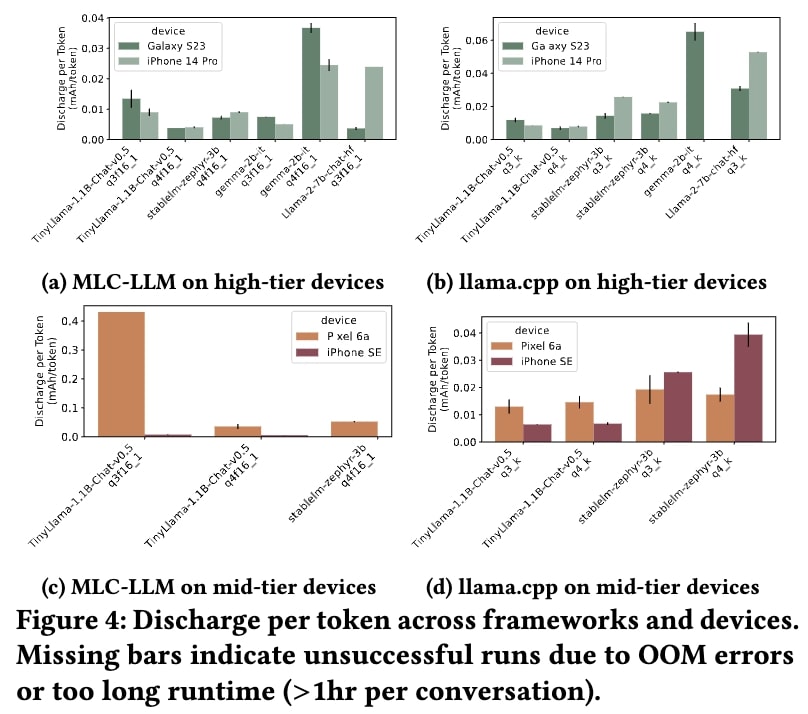

Figure 4 depicts the discharge rate per token generated, for different model, device and framework combinations. This is expressed in mAh/token.
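One way to arrive at a mAh/token figure is to integrate the current drawn during generation and divide by the number of tokens produced. The sketch below assumes the power monitor yields timestamped current samples in mA; the function names and sample values are hypothetical and do not reproduce the study's measurements.

```python
def discharge_mah(timestamps_s, current_ma):
    """Integrate current (mA) over time (s) with the trapezoidal rule; return mAh."""
    if len(timestamps_s) != len(current_ma):
        raise ValueError("timestamps and current samples must have equal length")
    total_ma_s = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        total_ma_s += 0.5 * (current_ma[i] + current_ma[i - 1]) * dt
    return total_ma_s / 3600.0  # mA*s -> mAh


def discharge_per_token(timestamps_s, current_ma, generated_tokens):
    """Average discharge per generated token, in mAh/token."""
    if generated_tokens <= 0:
        raise ValueError("generated_tokens must be positive")
    return discharge_mah(timestamps_s, current_ma) / generated_tokens


# Illustrative trace: a constant 1800 mA draw for 4 seconds while generating 40 tokens.
ts = [0.0, 1.0, 2.0, 3.0, 4.0]
ma = [1800.0] * 5
print(f"{discharge_per_token(ts, ma, 40):.3f} mAh/token")
```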

Insights: We witnessed a quite heterogeneous landscape in terms of device performance across models. Prefill throughput, typically compute bound, is much higher than generation throughput, which is typically memory bound. MLC-LLM generally offered higher performance than llama.cpp, but at the cost of model portability. Surprisingly, 4-bit models ran faster than their 3-bit counterparts, but at the expense of higher memory consumption, which caused certain models to run out of memory at runtime. Last, the Metal-accelerated iPhones showed higher throughput than the OpenCL-accelerated Android phones in the case of MLC-LLM.

Energy-wise, larger networks exhibit higher discharge rates, as traffic between on-chip and off-chip memory consumes a significant amount of energy. Indicatively, if we deployed Zephyr-3B (4-bit quantized) on S23 on MLC-LLM, iPhone Pro on MLC-LLM and iPhone 14 Pro on llama.cpp, their batteries would be depleted after 542.78, 490.05 and 590.93 prompts on average, respectively. Last, CPU execution was less energy efficient, which we attribute to its longer inference latency compared to accelerated execution.
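The arithmetic behind a "prompts until depletion" estimate can be sketched as follows. This is a back-of-the-envelope model that assumes the battery is drained by inference alone and that every prompt costs the same; the function name and the input values are hypothetical and are not the figures used in the study.

```python
def prompts_until_depleted(battery_capacity_mah: float,
                           discharge_mah_per_token: float,
                           tokens_per_prompt: float) -> float:
    """Estimate how many prompts a full battery can serve.

    Assumes inference is the only load and that each prompt consumes
    discharge_mah_per_token * tokens_per_prompt mAh.
    """
    mah_per_prompt = discharge_mah_per_token * tokens_per_prompt
    if mah_per_prompt <= 0:
        raise ValueError("per-prompt discharge must be positive")
    return battery_capacity_mah / mah_per_prompt


# Illustrative inputs only: a 3000 mAh battery, 0.1 mAh/token, 100 tokens per prompt.
print(f"{prompts_until_depleted(3000, 0.1, 100):.0f} prompts")
```

In practice the screen, radios and background tasks also draw power, so an estimate of this kind is an upper bound on what a user would see.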

Quality Of Experience

In real-world settings, running a large model on device can adversely affect the user experience and render the device unstable or unusable. There are largely three dimensions to consider:

- Device responsiveness refers to the general stability and reliability of the device during LLM inference. Factors that affected device responsiveness included long model loading times, out-of-memory errors that killed the application, and device restarts, which effectively amount to a denial of service (DoS).
- Sustained performance refers to the device’s ability to offer the same performance across multiple consecutive inference requests. We noticed in our experiments that performance under sustained load was not stable, but fluctuated. Reasons for this behavior include DVFS, thermal throttling and different power profiles, along with potential simultaneous workloads.
- Device temperature affects not only device performance, but also user comfort. Devices nowadays come in various forms, but mostly remain passively cooled. Therefore, heat dissipation is mainly facilitated by the use of specific materials, and heat management is governed by the OS. The power draw did cause temperatures to rise to uncomfortable levels, reaching 47.9°C after one full conversation with the Zephyr-3B (4-bit) model on the iPhone 14 Pro.

Insights: Tractability of the LLM inference workload does not imply deployability.

Accuracy Impact of Quantization

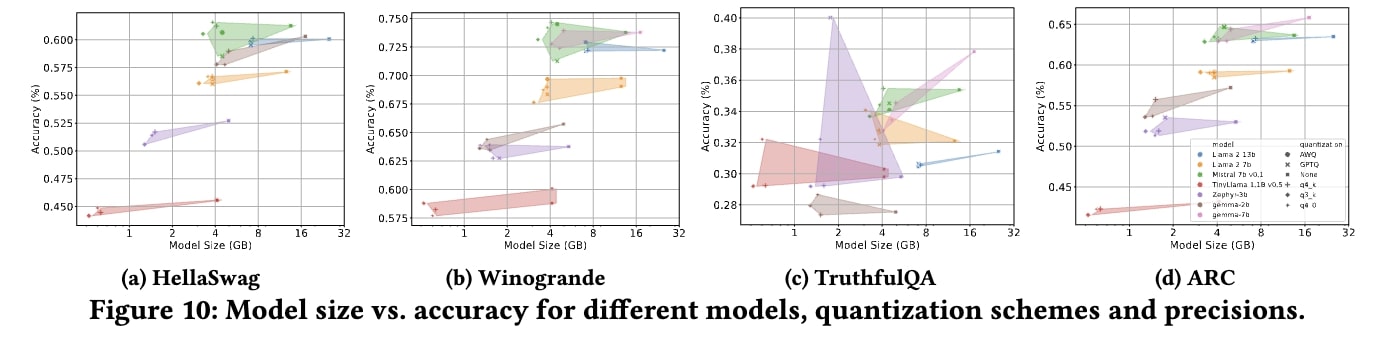

Today’s LLMs are quite large. At the same time, most devices come equipped with memory in the region of 6-12 GB, so deploying such models on device is usually only possible through compression. Quantization is a compression technique that lowers the precision with which weights and activations are represented. However, this comes at the expense of accuracy. We measured the impact of different model architectures and sizes, quantization schemes and precisions on the accuracy of four natural language tasks (HellaSwag, Winogrande, TruthfulQA, ARC-{E,C}).
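To make the size and accuracy trade-off concrete, the sketch below estimates the weight footprint at a given bit width and applies a plain symmetric round-to-nearest quantizer to a handful of toy weights. This is a generic illustration, not the scheme used by llama.cpp, MLC-LLM, AWQ or GPTQ; all function names and weight values are hypothetical.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes).

    Ignores quantization scales, activations and the KV cache.
    """
    return num_params * bits_per_weight / 8 / 1e9


def quantize_symmetric(weights, bits):
    """Round-to-nearest symmetric quantization with a single shared scale."""
    qmax = 2 ** (bits - 1) - 1              # e.g. 7 for 4-bit signed integers
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [v * scale for v in q]


def mean_abs_error(a, b):
    """Mean absolute difference between two equally long sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Toy weights, for illustration only.
weights = [0.12, -0.53, 0.91, -0.07, 0.33, -0.88, 0.45, 0.02]
for bits in (8, 4, 3):
    q, scale = quantize_symmetric(weights, bits)
    err = mean_abs_error(weights, dequantize(q, scale))
    size = weight_footprint_gb(7e9, bits)
    print(f"{bits}-bit: ~{size:.2f} GB for 7B params, mean abs error {err:.4f}")
```

For comparison, the same 7B-parameter model at 16 bits per weight needs about 14 GB for weights alone, which already exceeds the 6-12 GB range mentioned above.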

Insights: The most evident performance difference comes from the model architecture and parameter size, and this difference persists across datasets. In terms of quantization schemes, bit width is correlated with model size, but also with accuracy, i.e., a lower bit width means a higher error rate. On the other hand, no single quantization scheme performed uniformly better across the board. For larger models (≥7B parameters), AWQ 5 and GPTQ 6 performed slightly better, at the expense of larger model sizes.

Offloading to Edge Devices

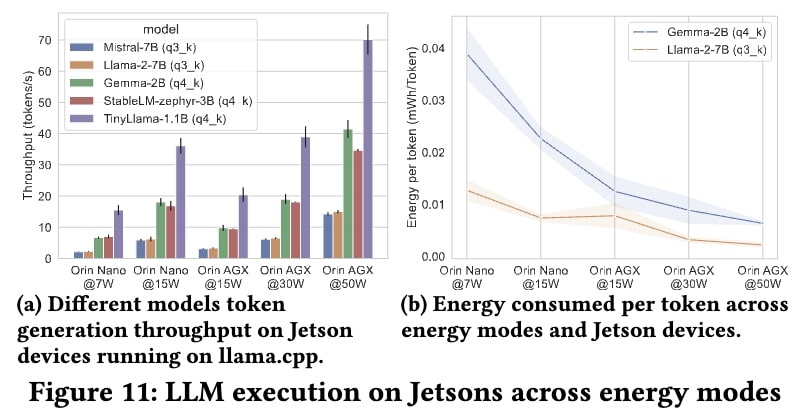

Since the QoE and accuracy of LLMs are impacted by running on device, as evidenced earlier, we examine an alternative model of offloading computation to nearby devices at the consumer edge. Such a device may be a dedicated accelerator (e.g., an Edge-AI Hub) or another edge device (e.g., a Smart TV or a high-end router). To check the viability of this paradigm, we employ two Jetson devices, namely Nano (mid-tier) and AGX (high-tier).

Insights: Overall, generation throughput is significantly higher than in the equivalent mobile runtime, and this performance can also be sustained for longer periods. Indicatively, for Zephyr-7B (4-bit), the average throughput is 3.3× and 1.78× higher, for prefill and generation respectively. At the same time, we witnessed that energy efficiency moves in the same direction as the device’s TDP.

Key Takeaways

This work highlights the potential and challenges of deploying Large Language Models on consumer mobile devices for both iOS and Android ecosystems. While advancements in hardware and algorithmic breakthroughs such as quantization show promise, on-device deployment currently impacts latency, device stability, and energy consumption. Offloading computation to local edge devices offers a viable alternative, providing improved performance and energy efficiency. Continued innovation in both hardware and software will be crucial for making local AI deployment practical, preserving user privacy, and enhancing sustainability.

Read More

We are glad to announce that the associated paper has been accepted for publication at the 30th Annual International Conference on Mobile Computing and Networking (ACM MobiCom'24).

You can find more information in our pre-print: https://arxiv.org/abs/2403.12844

The codebase of MELT can be found here: https://github.com/brave-experiments/MELT-public.