Deep learning is a subset of machine learning (ML). It’s a multi-layered (or “deep”) architecture for artificial intelligence (AI) models. ML is generally concerned with how machines learn: feeding AI models lots of data, and using learning algorithms to train them to improve capabilities and outputs over time. If the end goals of ML are to classify data, discover patterns and insights, and make predictions/decisions, deep learning is a specific ML technique used to reach those goals.

What are deep neural networks?

Since deep learning falls under the larger umbrella of machine learning, it still relies on core ML principles such as training and optimizing AI models. Deep learning is a type of machine learning that employs “deep” neural networks to enable more complex problem-solving.

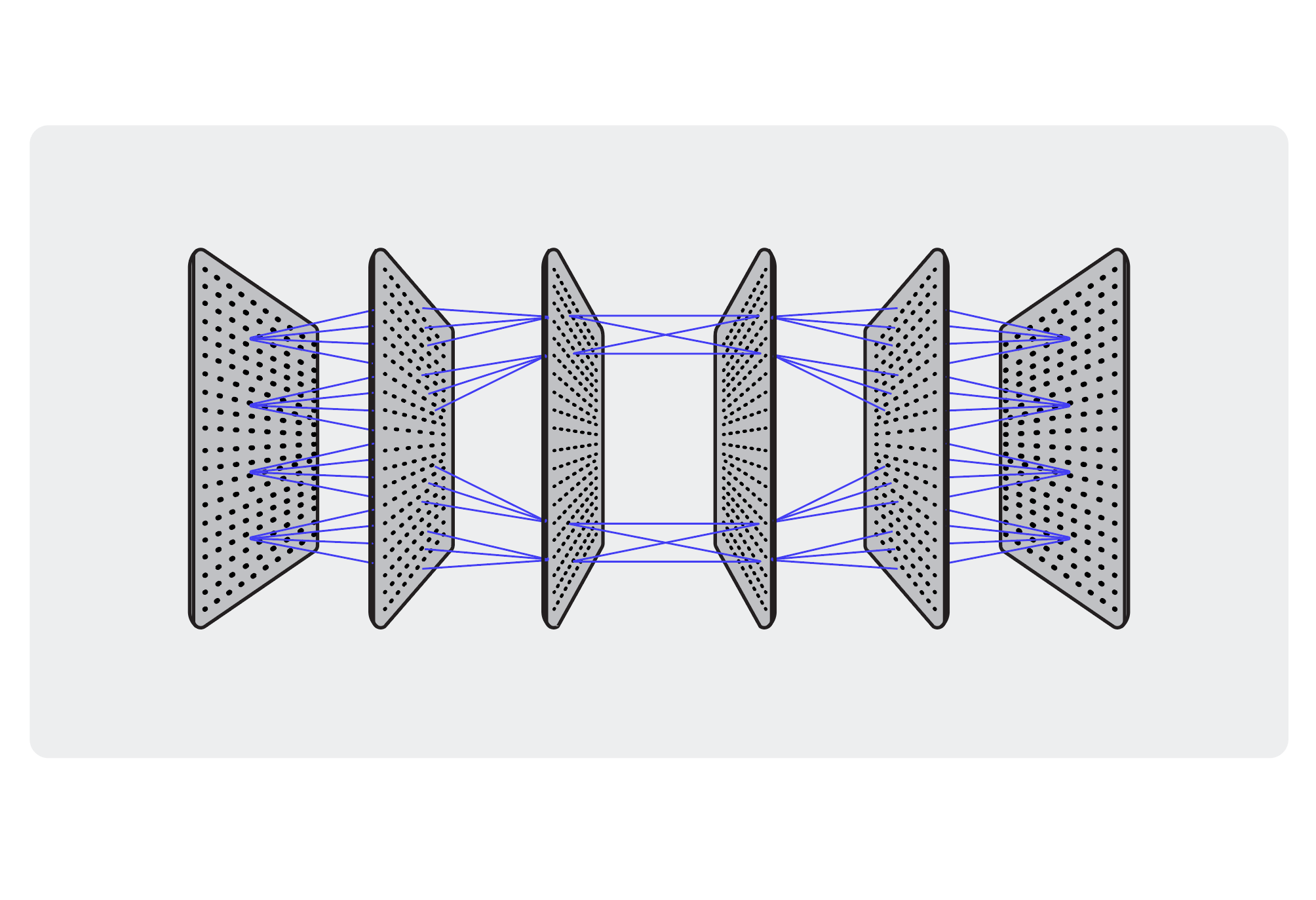

Where a basic machine learning model might only have two layers—input and output—deep neural networks have additional “hidden” layers in between. By leveraging many layers, deep learning models can extract higher-level features and better capture complex relationships in data.

The hierarchy of deep neural network architecture

Structurally speaking, deep learning involves multiple layers stacked atop one another. With this structure, deep learning models can learn “hierarchical” patterns in data: each layer transforms the output of the layer before it, so later layers can build upon the features discovered by earlier ones.

Because the layers are trained together, each one learns the features that are most useful to the layers that follow, which makes the entire ML model capable of discovering sophisticated features and relationships that humans often miss. This makes deep learning models well suited to tasks like image and speech recognition, or natural language processing.

An example of how deep learning architecture works

Imagine a deep learning AI designed for image recognition. A model like this will have many layers, each learning to detect its own kinds of features, but we’ll group some together here for purposes of illustration.

- Low-level layers: these layers tackle the most basic tasks to identify simple patterns, such as edges, corners, curves, and basic textures.

- Mid-level layers: these layers learn to combine the features from low-level layers to detect more meaningful patterns like shapes (e.g. circles), or parts of objects (e.g. wheels).

- High-level layers: low- and mid-level features are combined to recognize even more complex patterns, like specific objects (e.g. cars or faces).

Any neural network with a hidden layer has at least three layers: the input layer, the output layer, and (in between them) at least one hidden layer. In practice, a network is usually called “deep” when it has more than one hidden layer, and many deep learning AIs have dozens or even hundreds of layers. The more hidden layers a deep learning AI has, the “deeper” it’s said to be, and the more complexity and abstraction it’s capable of.

It’s also worth noting that more complex deep learning architectures require more computing power, larger data sets, and efficient algorithms to work through all the many layers of computation they perform.

Neural networks: how deep learning layers communicate with each other

The process we’ve been describing, where each layer relays its discoveries to the next, makes use of what’s called an artificial neural network. An artificial neural network is a mathematical model, implemented in software, that is loosely inspired by the structure and function of the human brain.

If deep learning is the what, then neural networks are the how. The network structure enables communication between the different layers of a deep learning system—so the distinct layers can communicate and accomplish things together.

The general structure of neural networks

Each layer of a neural network is made up of several interconnected-but-individual nodes (or “neurons”) that perform certain functions. And each neuron is interconnected with neurons in adjacent layers through weighted connections. Each neuron receives input from the previous layer, performs its own computation, and passes the output to the neurons in the next layer.
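The flow described above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the sigmoid activation, the function names, and every weight and bias value are assumptions chosen for the example, not details taken from any particular model.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)

def layer_output(inputs, layer):
    """A layer is a list of (weights, bias) pairs, one pair per neuron."""
    return [neuron_output(inputs, weights, bias) for weights, bias in layer]

def forward(inputs, layers):
    """Pass the inputs through each layer; one layer's output feeds the next."""
    activations = inputs
    for layer in layers:
        activations = layer_output(activations, layer)
    return activations

# Hypothetical network: 2 inputs -> hidden layer of 3 neurons -> 1 output neuron.
hidden_layer = [([0.5, -0.2], 0.1), ([0.3, 0.8], -0.4), ([-0.6, 0.1], 0.0)]
output_layer = [([0.7, -0.5, 0.2], 0.05)]

prediction = forward([1.0, 2.0], [hidden_layer, output_layer])
print(prediction)  # a single value between 0 and 1
```

Adding more entries to the list passed to `forward` is all it takes to make this toy network “deeper.”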

What’s really clever, though, is that the weight of each connection between neurons is learned during the training process. The weights are usually randomized initially, and the network then adjusts them step by step as it learns to minimize errors and maximize the desired outcome. The weights and biases are rarely set by hand; what practitioners do adjust manually are the settings that govern training, such as how large each adjustment step is.
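To make the idea of learned weights concrete, here is a minimal sketch that trains a single neuron with gradient descent, one common way of adjusting weights (the article itself doesn’t name a specific algorithm). The toy data, the learning rate, and the number of steps are all assumptions picked for illustration.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# Toy data that follows y = 2x + 1 (an invented target for illustration).
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]

# Start from random values, as is usual before training.
weight = random.uniform(-1.0, 1.0)
bias = random.uniform(-1.0, 1.0)
learning_rate = 0.05  # assumed step size

def mean_squared_error(w, b):
    """Average squared gap between the neuron's output and the target."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

initial_error = mean_squared_error(weight, bias)

for _ in range(500):
    # Gradients of the mean squared error with respect to weight and bias.
    grad_w = sum(2 * (weight * x + bias - y) * x for x, y in data) / len(data)
    grad_b = sum(2 * (weight * x + bias - y) for x, y in data) / len(data)
    # Step against the gradient to reduce the error.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

final_error = mean_squared_error(weight, bias)
print(weight, bias, final_error)  # weight approaches 2, bias approaches 1
```

Real networks apply the same idea to millions or billions of weights at once, using backpropagation to compute the gradients for every layer.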

The main types of deep neural networks

So far we’ve described the general structure of deep learning neural networks, but there are many different types of deep neural networks as well—each suited to better handle certain types of data, and perform certain tasks. While not an exhaustive list, here are a few key neural network architectures used in deep learning AI.

Convolutional neural networks (CNNs)

CNNs are designed to detect spatial patterns and visual features from grid-like data, like images. They are most commonly used for computer vision tasks, like image classification and object detection. CNNs rose to prominence in the early 2010s and revolutionized the field of deep learning AI with their ability to learn and extract meaningful features from images. This, in turn, has led to advancements in fields like medical imaging, facial recognition, and autonomous driving.
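The core operation behind a CNN is sliding a small grid of weights (a “kernel”) across an image. The sketch below shows that operation on a tiny made-up image. Note the assumptions: the kernel here is written by hand to respond to a vertical edge, whereas a real CNN learns its kernel values during training, and the function name `convolve2d` is just a label for this example.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1),
    summing the elementwise products at each position."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Tiny 4x4 "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright vertical edge.
vertical_edge_kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is largest in the middle column, exactly where the image changes from dark to bright. This is the kind of simple edge feature that the low-level layers of an image model pick up.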

Recurrent neural networks (RNNs)

RNNs are designed to handle sequential data (as opposed to grid-like data) by using connections that carry information forward from one step of a sequence to the next. RNNs have neural connections that form “cycles,” which allow them to maintain an internal state (or “memory”) of what they have already seen. This makes RNNs well-suited to process audio and natural language, for tasks like voice recognition, translation, sentiment analysis, and chatbot functionality (because they can preserve context across a conversation).
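The “cycle” in an RNN can be sketched as a loop in which the previous state is fed back in alongside each new input. This is a deliberately tiny illustration with a single hidden value; the weights (`w_input`, `w_hidden`), the `tanh` activation, and the input sequences are assumptions chosen for the example.

```python
import math

def rnn_step(x, hidden, w_input, w_hidden, bias):
    """One recurrent step: mix the current input with the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)

def run_rnn(sequence, w_input=0.5, w_hidden=0.8, bias=0.0):
    """Process a sequence one element at a time, carrying the state forward."""
    hidden = 0.0  # the "memory" starts empty
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states

# Two sequences that end with the same inputs but start differently.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print(states_a)  # the final state is still non-zero
print(states_b)  # every state stays at 0.0
```

Both sequences end with the same two inputs, yet the final states differ, because the first sequence’s opening value is still echoing through the hidden state.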

Transformer networks

Transformer networks represent the state of the art in natural language processing (think AI chatbots like ChatGPT). Transformers use “self-attention mechanisms” to process all the elements of an input in parallel, rather than one at a time like RNNs. Self-attention lets transformers weigh the importance of different elements in input data (like different words in a sentence) relative to one another, and focus on the most relevant information. Transformers are also able to handle much more data in much larger contexts.
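Here is a simplified sketch of self-attention in the scaled dot-product form. To keep it short, it assumes the queries, keys, and values are all just the input vectors themselves; real transformers first pass the inputs through learned projections, and the three “word” vectors below are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product: a simple measure of how similar two vectors are."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this element against every element in the sequence.
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # The output is a weighted average of every vector in the sequence.
        mixed = [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three made-up 2-dimensional "word" vectors; the first and third are similar.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
outputs, attention_weights = self_attention(tokens)

for row in attention_weights:
    print([round(w, 3) for w in row])
```

The first token puts more weight on the third token than on the second, because their vectors are more alike. Each pass through the loop depends only on the inputs and not on any earlier pass, which is why this computation can be run in parallel.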

This ability has led to transformer networks being widely adopted by the AI industry (specifically with language models). They represent a popular and powerful alternative to RNNs when it comes to tasks like text generation, sentiment analysis, translation, and language understanding (though RNNs remain a reasonable choice for some audio and time-series tasks, especially where data arrives one step at a time). “Vision transformers” have also recently become popular in performing many image recognition tasks.

Generative adversarial networks (GANs)

GANs are somewhat unique in that they consist of two neural networks: a generator (which creates fake data samples) and a discriminator (which tries to distinguish between real and fake data). These two neural networks are pitted against each other (“adversarially”) in a process that produces increasingly convincing fake data, and an increasingly reliable detection system.
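The opposing goals of the two networks can be expressed as two loss functions. The sketch below covers only those objectives, not a full training loop. It assumes the discriminator outputs a probability that a sample is real, uses binary cross-entropy (a common choice, along with the widely used “non-saturating” form of the generator loss), and plugs in made-up scores.

```python
import math

def binary_cross_entropy(prediction, target):
    """Penalty for predicting `prediction` (a probability) when the truth is `target` (0 or 1)."""
    eps = 1e-12  # keep the prediction away from 0 and 1 to avoid log(0)
    prediction = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(prediction) + (1 - target) * math.log(1.0 - prediction))

def discriminator_loss(score_on_real, score_on_fake):
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    return binary_cross_entropy(score_on_real, 1) + binary_cross_entropy(score_on_fake, 0)

def generator_loss(score_on_fake):
    """The generator wants the discriminator to score its fakes near 1."""
    return binary_cross_entropy(score_on_fake, 1)

# Hypothetical discriminator scores (probability that a sample is real).
fooled = 0.9      # the discriminator thinks the fake is real
not_fooled = 0.1  # the discriminator spots the fake

print(generator_loss(fooled), generator_loss(not_fooled))
print(discriminator_loss(0.9, fooled), discriminator_loss(0.9, not_fooled))
```

When the discriminator is fooled, the generator’s loss is low and the discriminator’s loss is high, and vice versa. Training alternates between updating each network to lower its own loss, which is what drives both to improve.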

GANs have gained relevance in applications that generate realistic content for artistic purposes (like some AI models that generate images from text inputs, though many newer image generators rely on other techniques), as well as for less-desirable purposes, like AI-generated “deepfakes.”

The importance of data quality

This article covers just a few examples of the neural network architectures that make up deep learning ML models. The field is rapidly growing and evolving, and deep learning remains at the forefront of AI development due to its ability to produce highly sophisticated AI systems. Of course, as with any AI model, the quantity and quality of the data used for training are crucial. Check out the Brave Search API to learn more about Brave’s high-quality data feeds for AI.